Key takeaways

- Scaling, impact and trust across ecosystems are crucial for AI’s progress

- Traditional partnership models are breaking because “neither the outcome nor the scope is static”

- Customer success must be defined up front, with adoption plans that blend technology and business outcomes

- Trust is a shared operational responsibility, spanning security posture, Responsible AI and realistic expectations

- The best partnerships are built to sustain change through governance, transparency and shared accountability

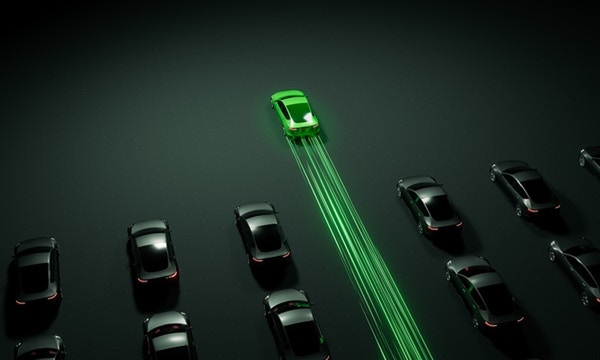

The AI economy is rewriting the rules of growth. Traditional partnerships built around point solutions and static roles are colliding with rapid technological change, geopolitical volatility and rising expectations for measurable outcomes. As Tamara McMillen, Chief Revenue Officer at Economist Group, put it, the challenge is no longer how organizations access AI, but “how we scale it, how we have impact,” while balancing “risk and trust.”

That framed a panel discussion at HCLTech’s pavilion during the 2026 World Economic Forum in Davos. Moderator McMillen noted that despite the attention AI has received, adoption remains limited, with only around 10% of large firms embedding AI at a production level, according to US Census Bureau data cited by MIT. She also pointed to the high attrition rate of experimentation, noting that as many as 95% of AI pilots fail to deliver meaningful business value.

Against that backdrop, McMillen was joined by Abhay Chaturvedi, Corporate Vice President, Tech Industries at HCLTech; Adele Trombetta, Senior Vice President and General Manager of CX EMEA at Cisco; and Joshua Shapiro, Vice President, Counter Adversary Operations at CrowdStrike, to explore how partnerships must evolve to move AI from experimentation to scalable, trusted impact.

Why traditional partnership models are breaking

Chaturvedi suggested that what’s breaking is not the need for partnerships, but the old assumptions behind them. Historically, “the traditional partnerships were very static,” with fixed boundaries and predictable outcomes repeated over multi-year cycles. In the AI era, “neither the outcome nor the scope is static,” and partnerships must evolve continuously based on customer outcomes and external volatility.

Trombetta reinforced the point with a customer lens, suggesting that customers “do not have a clear strategy and use cases identified that can prove the value of AI.”

The shared implication: partnership models designed for static environments and outcomes struggle when AI use cases, risk profiles and customer expectations evolve quickly.

Working backward from customer outcomes in multi-partner delivery

McMillen stressed a commercial truth that becomes even sharper in multi-partner delivery: “nobody buys your product…they buy it because it’s delivering an outcome.” When multiple companies combine to deliver one end-to-end solution, success depends on aligning what “value” means, how it will be measured and how adoption barriers will be removed.

Trombetta described how customer expectations are changing in the AI era. Customers want providers to move from reactive to proactive, and to deliver “a very much personalized experience.” They increasingly expect guidance because adoption is “not only [a] technology conversation but a lot of human conversations about technology.” Her approach focuses on planning: “we are defining…in advance, what customer value is,” then building “what we call [a] customer success plan,” combining technology steps with business outcomes.

Chaturvedi added that expectation-setting is itself a trust mechanism, especially after repeated tech cycles that promised the world but didn’t deliver on that promise. The fix is disciplined clarity: “we understand the outcome, we drive the expectations around that outcome and we have a plan to achieve that.”

Building trust through security, Responsible AI and realistic promises

Trust surfaced as a shared operational obligation across partners. Shapiro framed the issue through the cybersecurity reality: tool sprawl and fragmentation are eroding defensibility. The average organization “has around 70 security tools,” creating “cognitive overload” for defenders. Tool sprawl increases risk by creating gaps adversaries can exploit.

He also warned that the threat landscape is changing as AI is adopted by attackers and enterprise AI use creates “new attack surfaces.” Adversaries are “using Agentic AI,” and while “the tactics aren’t changing,” the “volume and the scale and the pace” are increasing. That raises the bar for every partner in the chain, because security is a key element of trust within a partner ecosystem. Each organization needs the right “people, process [and] technology in place to respond to these threats,” leveraging its partner and vendor ecosystem to “consolidate security tools where appropriate, implement AI systems that scale their own cyber defensive capabilities” and “secure enterprise AI applications to enable safe use” throughout the business.

Trombetta expanded trust beyond security into Responsible AI, naming “data sovereignty,” “accountability” and “ethical AI,” and warning that biased historical data can impact future outcomes. Chaturvedi tied trust to credibility, cautioning against hype that claims AI will solve everything overnight, which only deepens skepticism.

Across these angles, trust becomes a system: secure foundations, responsible governance and promises anchored in deliverable outcomes.

Keeping humans in the loop and choosing the right partners

As AI becomes embedded in day-to-day operations, the challenge shifts from whether it works to whether it is being used well. Speed and efficiency matter, but so does human judgment. Outputs still need to be tested, interrogated and understood, particularly in environments where mistakes carry operational or security consequences.

“You have to keep humans in the loop,” said Shapiro, mentioning that fully automated end-to-end AI implementation “from point A to point Z” is not realistic today. The right approach is using AI for “small sub tasks within broader processes that give you high leverage,” while preserving oversight and training. “Human operators with the right skills” are key.

Trombetta added that readiness now includes interaction skills, not only technical skills: how to ask the right questions and “how do you evaluate, how do you assess the outcomes of the AI.”

On partner selection, Chaturvedi laid out three criteria:

- Avoid “pretend AI” companies

- Assess cultural readiness

- Build for longevity through executive relationships and governance

Trust and resilience, as mentioned, are central to that model. As he put it, partnerships require “a commitment that if things go wrong, we will help each other,” rather than defaulting to blame.

Shapiro added that the vendors best positioned to win will have “large volumes of unique, high fidelity, training data in highly-specialized and domain-specific areas” to train models. Otherwise, it’s “garbage in, garbage out.”

Trombetta emphasized a shift from interchangeable augmentation to shared outcomes, deciding together where each partner adds the most value.

What defines a winning partnership in the AI economy

The panel closed with a shared message: AI-era growth is ecosystem growth, but only when partnerships are built to scale, govern and sustain outcomes.

Shapiro explained that organizations need to be “strategic about where we interject AI, across people, process and technology,” adding: “don’t just blindly throw AI at the problem.”

Trombetta emphasized the broader ecosystem, “that includes the government…the customers…the partners,” and warned that leadership expectations are already changing.

Chaturvedi brought it back to durability: the hardest work is “sustaining partnerships for a long term in a continuously changing environment,” through governance, transparency and mutual support.

McMillen’s closing thoughts captured the point of the new playbook: partnerships matter when they create sustained, “synergistic value creation” anchored in customer outcomes.

FAQs

Why do so many AI pilots fail to deliver enterprise value?

Many start with technology rather than customer outcomes. Without clear use cases, strategy and an adoption plan, proofs of concept stall and never scale.

How are partnerships changing in the AI economy?

They are shifting from static roles to evolving collaboration focused on customer outcomes, shared risk, integrated delivery and the ability to adapt as conditions change.

What does trust mean in multi-partner AI delivery?

Trust is operational. It includes security posture, Responsible AI controls, data sovereignty, clear governance and realistic expectation-setting so weak links don’t undermine outcomes.

Why does cybersecurity tool sprawl matter for partnership risk?

When the average organization runs around 70 security tools, teams face cognitive overload and fragmented visibility. Consolidation and AI-driven analysis can reduce cost and risk while improving response speed.

What should leaders look for when selecting AI partners?

Choose partners with real investment, cultural readiness, strong governance and strong data foundations. Avoid vendors that only pretend to deploy AI, and avoid partnerships that lack shared outcome alignment.
