This blog post demonstrates how Amazon Bedrock Agents powered by Amazon Nova Pro can accelerate the modernization of insurance claim management systems from legacy mainframes to cloud-based platforms such as Guidewire. The solution automates data analysis, transformation, and migration tasks, benefiting multiple roles across the software development lifecycle. Although the case study focuses on insurance claims migration, the approach provides a blueprint for any enterprise undertaking mainframe modernization, in any industry.

Business challenge

A large global insurer was embarking on a strategic claim management modernization program to improve customer experience by migrating to a new, robust claim management system. The program required a large volume of claim data to be extracted from the mainframe-based claim management system, transformed, and migrated to the relational database behind Guidewire, such as Oracle or Microsoft SQL Server. The source data included policy and claim data with attributes such as insurance account number, claim number, policy details, incident date, incident description, and other related details.

The customer sought an accelerator to help reduce migration time and improve the productivity of the program team.

Solution approach leveraging agentic AI

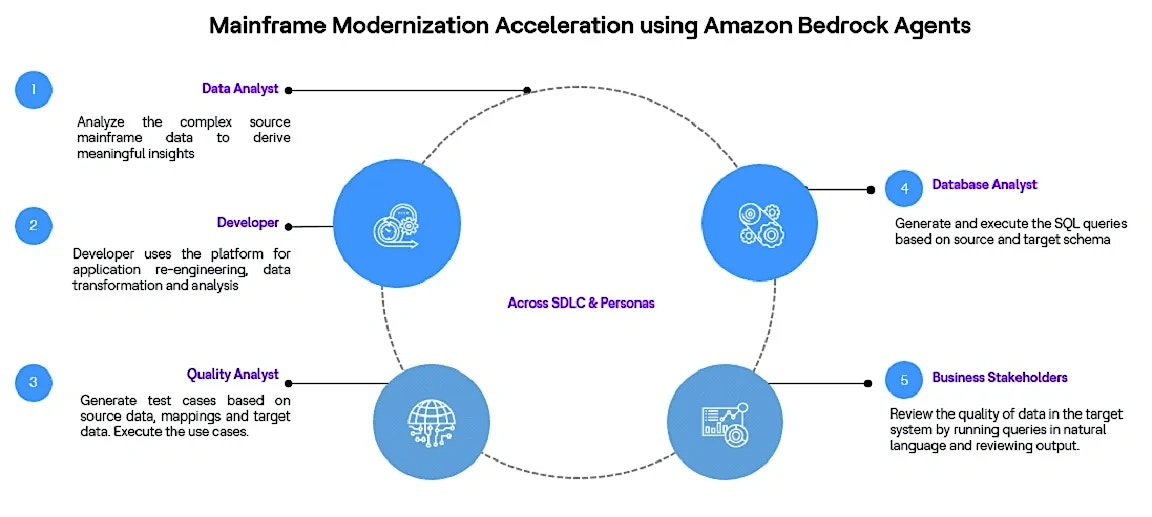

The solution was designed to accelerate the migration program by improving the productivity of the diverse personas working on the program across the software development lifecycle (SDLC).

Here are a few of the key personas involved in the program and how the solution aimed to improve their productivity:

Data analyst - Uses the accelerator to analyze complex source policy management data and to extract business logic and rules from existing code, which helps prepare the required business documentation.

Developer - Uses the platform for application re-engineering, data transformation and analysis, and for generating source-to-target mapping files, ETL scripts, or code transformations from COBOL and JCL to Java or another programming language.

Quality analyst - Generates test cases based on the source data, mappings, and target data, then executes the test cases and reports the results.

Business stakeholders (UAT) - Review the quality of data in the target system by running natural-language queries and reviewing the output.

Solution architecture on AWS

The solution was designed and developed with the following core objectives in mind:

- Ability to analyze and query large volumes of source data

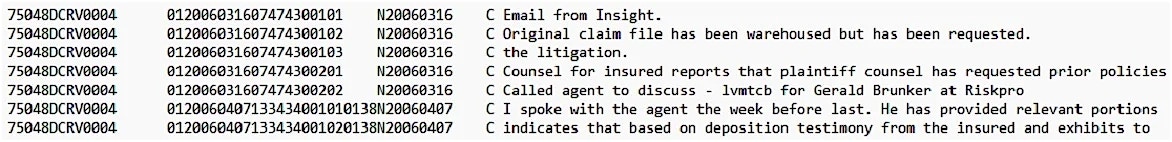

The Bedrock Transformation Agent, driven by Amazon Nova Pro, analyzed the source data using two inputs: the files extracted from the legacy system, containing details such as account number, claim details, and policy details, and a data definition file describing the data attributes in the legacy source file.

Sample source file from legacy systems

| Field Number | Field Description | Field Definition | Field Length | Start Position | End Position | Field Positions |
|---|---|---|---|---|---|---|
| 001 | Account | Pic X(5) | 05 | 0001 | 0005 | 0001 - 0005 |
| 002 | Record Type | Pic X(1) | 01 | 0006 | 0006 | 0006 - 0006 |

Sample data definition file providing details of the source file

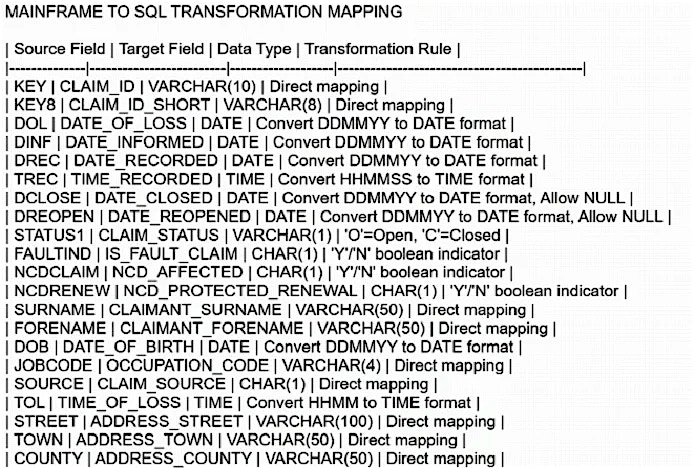
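The data definition file is what makes the raw extract interpretable: each field occupies a fixed, 1-based range of columns. The following is a minimal Python sketch of applying such a layout to one record, using the two sample fields from the definition above. It assumes the extract has already been converted from EBCDIC to text (Python's `cp037` codec is one option) and does not handle packed-decimal (`COMP-3`) fields. `FieldDef`, `LAYOUT`, and `parse_record` are illustrative names, not part of the solution described here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldDef:
    """One row of the data definition file."""
    number: str
    name: str
    start: int  # 1-based start position, as listed in the definition file
    end: int    # 1-based, inclusive end position


# The two sample fields shown above; a real layout lists every field in the record.
LAYOUT = [
    FieldDef("001", "Account", 1, 5),
    FieldDef("002", "Record Type", 6, 6),
]


def parse_record(line: str, layout: list[FieldDef]) -> dict[str, str]:
    """Split one fixed-width record into a {field name: value} dictionary."""
    record = {}
    for field in layout:
        # Convert the 1-based inclusive range to a 0-based Python slice.
        raw = line[field.start - 1 : field.end]
        # PIC X values are left-justified and space-padded, so drop trailing spaces.
        record[field.name] = raw.rstrip()
    return record
```

For example, `parse_record("A1234C", LAYOUT)` returns `{'Account': 'A1234', 'Record Type': 'C'}`.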

- Generate transformation mappings, ETL scripts, and code transformations

The Bedrock Transformation Agent generated the transformation mapping and ETL scripts needed to transform the source data to the target schema of the Guidewire system. Once it is given the source file data, the data definition file, and the target database schema, the agent can generate the transformation mapping and ETL scripts.
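A transformation mapping is essentially a table of target columns, each tied to a source field and a conversion rule. The sketch below expresses such a mapping as a Python dictionary and applies it to one parsed record. The source field names, target column names, and the `YYYYMMDD` date format are hypothetical illustrations; they are not the actual Guidewire schema or the format of the mapping the agent produced.

```python
from datetime import datetime
from typing import Callable


def to_iso_date(value: str) -> str:
    """Convert a legacy YYYYMMDD date string to ISO 8601 (YYYY-MM-DD)."""
    return datetime.strptime(value.strip(), "%Y%m%d").date().isoformat()


# Hypothetical mapping: target column -> (source field, transform).
# Names are illustrative only, not actual Guidewire table or column names.
CLAIM_MAPPING: dict[str, tuple[str, Callable[[str], object]]] = {
    "account_number": ("Account", str.strip),
    "claim_number": ("Claim Number", str.strip),
    "loss_date": ("Incident Date", to_iso_date),
}


def apply_mapping(source: dict, mapping: dict) -> dict:
    """Build one target row by applying each column's transform to its source field."""
    return {column: transform(source[field]) for column, (field, transform) in mapping.items()}
```

Keeping the mapping as data rather than code makes it easy to review with analysts and to regenerate when the target schema changes.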

Transformation mapping generated by the Agent

The agent can also be used for code transformation from COBOL and JCL to Java or another programming language.

- SQL query generation for inserting data into the backend relational database

Using the Database Agent, the solution generated SQL queries to insert the data into the target relational schema without manual intervention. When the data volume is large and direct insertion would drive up costs, the agent can instead generate ETL scripts to run during migration.
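Whether the INSERT statements are written by hand or generated by an agent, binding values as parameters rather than splicing them into the statement text keeps values such as incident descriptions containing quotes from breaking the query and prevents SQL injection. The sketch below uses Python's built-in `sqlite3` module as a stand-in for Oracle or SQL Server. `build_insert` and `insert_rows` are hypothetical helpers, and the `?` placeholder style is specific to `sqlite3`; other database drivers use their own placeholder styles.

```python
import re
import sqlite3

# SQL identifiers must be plain names; values are never interpolated into the text.
_IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def build_insert(table: str, columns: list[str]) -> str:
    """Return a parameterized INSERT statement using sqlite3's '?' placeholders."""
    for name in (table, *columns):
        if not _IDENTIFIER.fullmatch(name):
            raise ValueError(f"unsafe identifier: {name!r}")
    placeholders = ", ".join("?" for _ in columns)
    return f"INSERT INTO {table} ({', '.join(columns)}) VALUES ({placeholders})"


def insert_rows(conn: sqlite3.Connection, table: str, rows: list[dict]) -> int:
    """Insert rows that share the same keys in a single transaction; return the row count."""
    if not rows:
        return 0
    columns = list(rows[0])
    sql = build_insert(table, columns)
    with conn:  # commits on success, rolls back if any row fails
        conn.executemany(sql, [tuple(row[c] for c in columns) for row in rows])
    return len(rows)
```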

Solution components overview

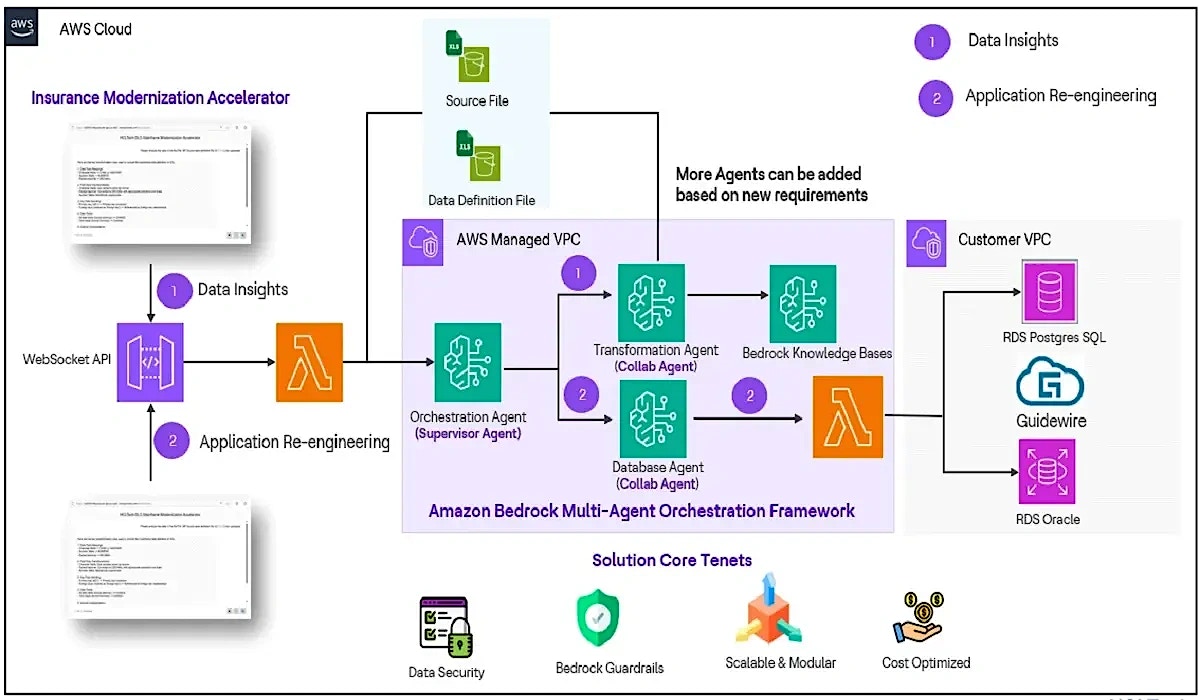

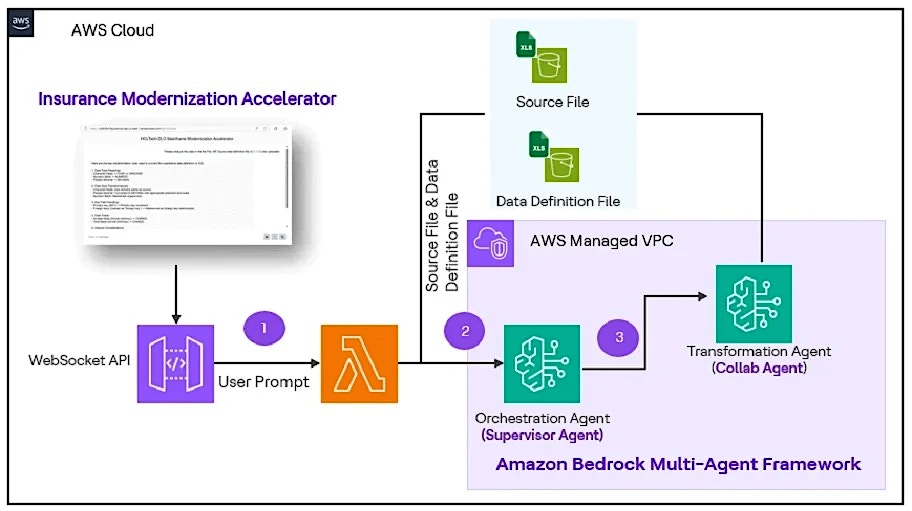

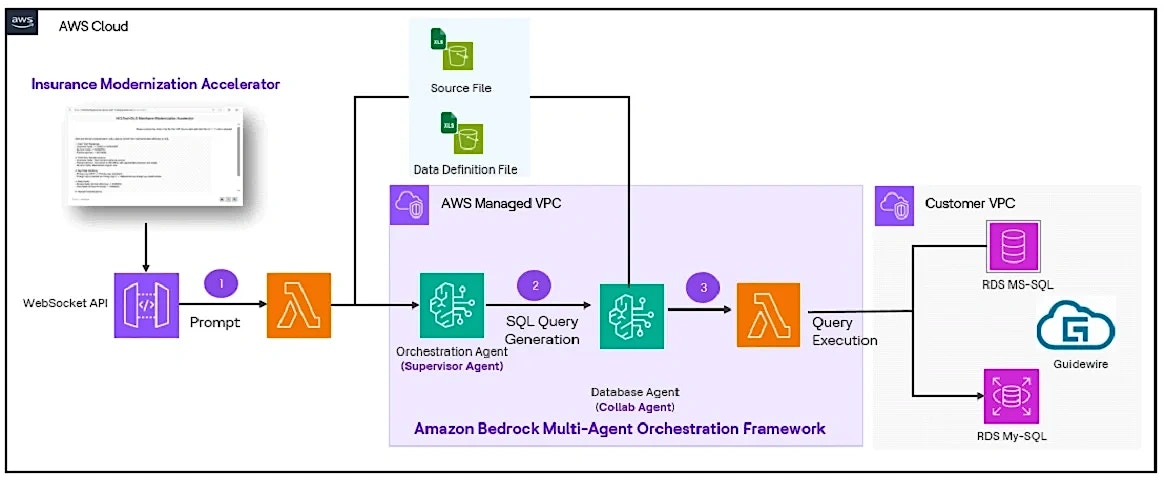

The overall solution was built using the Amazon Bedrock Multi-Agent Framework, Amazon Nova Pro, AWS Lambda, a WebSocket API, Amazon S3, a React UI, and an Amazon RDS database.

Amazon Bedrock Agents – The solution uses the Amazon Bedrock Multi-Agent Framework and has three agents:

- The Orchestrator Agent (supervisor) routes requests and coordinates collaboration between the other agents.

- The Transformation Agent (collaborator) analyzes the data for insights and generates the transformation logic: mappings, ETL scripts, and code transformations.

- The Database Agent (collaborator) generates SQL queries to insert data into the relational database.

Large Language Model – The agents use Amazon Nova Pro.

Interactive React UI – Provides a chat interface, hosted on Amazon ECS with AWS Fargate.

WebSocket API (Amazon API Gateway) – Provides a persistent connection for the chat interface and routes communication between the user and the Bedrock Agents.

AWS Lambda – Forwards the user input received through the WebSocket API to the agents by calling the Bedrock InvokeAgent API.
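The Lambda step can be sketched as a WebSocket route handler that forwards the user's prompt to the supervisor agent with the `bedrock-agent-runtime` client's `invoke_agent` call, gathers the streamed completion chunks, and posts the answer back over the open connection through the API Gateway Management API. This is a minimal sketch, not the solution's actual code: the `AGENT_ID` and `AGENT_ALIAS_ID` environment variables and the `prompt` and `sessionId` message fields are assumptions, and both clients can be injected so the logic can be exercised without AWS credentials.

```python
import json
import os
import uuid


def collect_completion(response: dict) -> str:
    """Concatenate the text chunks from an InvokeAgent response's event stream."""
    parts = []
    for event in response["completion"]:
        chunk = event.get("chunk")
        if chunk:  # other event types (e.g. traces) carry no answer text
            parts.append(chunk["bytes"].decode("utf-8"))
    return "".join(parts)


def handler(event, context, agent_client=None, ws_client=None):
    """WebSocket route handler: forward the user's prompt to the supervisor agent."""
    ctx = event["requestContext"]
    body = json.loads(event.get("body") or "{}")
    if agent_client is None or ws_client is None:
        import boto3  # imported lazily so the logic can be tested with fake clients

        agent_client = agent_client or boto3.client("bedrock-agent-runtime")
        ws_client = ws_client or boto3.client(
            "apigatewaymanagementapi",
            endpoint_url=f"https://{ctx['domainName']}/{ctx['stage']}",
        )
    response = agent_client.invoke_agent(
        agentId=os.environ["AGENT_ID"],            # hypothetical env var: supervisor agent ID
        agentAliasId=os.environ["AGENT_ALIAS_ID"],  # hypothetical env var
        sessionId=body.get("sessionId") or str(uuid.uuid4()),
        inputText=body["prompt"],                   # hypothetical message field
    )
    answer = collect_completion(response)
    ws_client.post_to_connection(ConnectionId=ctx["connectionId"], Data=answer.encode("utf-8"))
    return {"statusCode": 200}
```

Reusing a client-supplied `sessionId` keeps follow-up questions in the same agent session, so the supervisor retains the conversation context.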

Security – The following table lists the security measures considered in building a robust, secure solution.

| Security domain | Implementation strategy and AWS services |
|---|---|
| Responsible AI – Toxicity, PII redaction, Hallucination | Amazon Bedrock Guardrails for toxicity control, PII redaction, and contextual grounding checks to detect and mitigate hallucinations |
| Data at rest | AWS Key Management Service (KMS) for encrypting data in Amazon S3 and RDS databases |
| Data in transit | AWS PrivateLink with VPC interface endpoints for secure AWS service-to-service communication; TLS encryption for all client-service interactions |
| Authentication and authorization | Amazon Cognito (or a similar enterprise solution) for secure user identity management and authentication flows; IAM for fine-grained, role-based permission control |
| DDoS protection | Multi-layered defense with AWS Shield for network-layer (L3/L4) protection and AWS WAF for application-layer (L7) filtering against sophisticated attack vectors |

Usage scenarios

Here are a few scenarios that show how the solution works:

Persona 1 – Data analyst

- The source mainframe file is extracted and uploaded to a source folder in an S3 bucket.

- A data definition file defines the attribute names in the source mainframe file, such as customer ID, policy number, and policy date.

- Suppose a data analyst needs to analyze the source mainframe file. The analyst can upload the source and data definition files either through the React UI or directly to the S3 bucket.

- Next, the analyst enters a prompt along with the source file. This input is sent to the WebSocket API, which passes it to a Lambda function as an event.

- The Lambda function calls the InvokeAgent API to send the input to the Orchestrator Agent.

- The Orchestrator Agent routes the request to the Transformation Agent, which uses the underlying LLM to analyze the source data and generate insights based on the prompt.

- A similar approach can be used to extract business rules from existing legacy code.

Persona 2 - Developer

- The developer's key task is to take the source mainframe file as input, transform the data (producing a mapping file and ETL scripts), and insert the data into the backend database.

- The developer uploads the source file and data definition file and enters a prompt through the UI.

- This input is passed to the WebSocket API and then to AWS Lambda, which calls the Bedrock InvokeAgent API.

- When the Orchestrator Agent receives the input, it routes the request to the Transformation Agent, which generates the mapping logic based on the source and target schemas and returns the response to the user.

- If the developer requests insertion of the transformed data, the Database Agent generates the SQL queries and uses its attached action group to insert the data into the RDS database.

- Using a similar flow, the solution can be used for code transformation.
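An action group is backed by a Lambda function that Bedrock Agents invokes with the parameters the model selected. The sketch below assumes an action group defined with function details and a single hypothetical parameter, `sql_statement`. It accepts only a single `INSERT` statement, runs it against a `sqlite3` connection standing in for the RDS database, and returns the result in the function-details response format. A rejected or failing statement is returned with `responseState` set to `REPROMPT` so the agent can have the model revise the query. The guard is deliberately coarse; a production version would also restrict which tables can be written and connect as a least-privilege database user.

```python
import os
import sqlite3
from typing import Optional


def _get_param(event: dict, name: str) -> str:
    """Return the value of a named parameter from a function-details action group event."""
    for param in event.get("parameters", []):
        if param["name"] == name:
            return param["value"]
    raise KeyError(name)


def _reply(event: dict, text: str, reprompt: bool = False) -> dict:
    """Wrap text in the function-details response shape that Bedrock Agents expects."""
    function_response = {"responseBody": {"TEXT": {"body": text}}}
    if reprompt:
        # REPROMPT hands the text back to the model so it can try again.
        function_response["responseState"] = "REPROMPT"
    return {
        "messageVersion": "1.0",
        "response": {
            "actionGroup": event["actionGroup"],
            "function": event["function"],
            "functionResponse": function_response,
        },
    }


def action_handler(event: dict, context, conn: Optional[sqlite3.Connection] = None) -> dict:
    sql = _get_param(event, "sql_statement").strip()  # hypothetical parameter name
    # Coarse guard: one INSERT statement only (an optional trailing semicolon is allowed).
    if not sql.upper().startswith("INSERT INTO") or ";" in sql.rstrip(";"):
        return _reply(event, "Rejected: only a single INSERT statement is allowed.", reprompt=True)
    if conn is None:
        # Stand-in for an RDS connection; DB_PATH is a hypothetical environment variable.
        conn = sqlite3.connect(os.environ.get("DB_PATH", "/tmp/claims.db"))
    try:
        with conn:  # commit on success, roll back on error
            cursor = conn.execute(sql)
    except sqlite3.Error as exc:
        return _reply(event, f"Insert failed: {exc}", reprompt=True)
    return _reply(event, f"Inserted {cursor.rowcount} row(s).")
```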

Solution benefits and future enhancements

Compared with traditional approaches to large-scale mainframe modernization, the solution accelerator can reduce migration timelines by up to 30%.

The accelerator is being enhanced to improve the agents' contextual understanding and to generate customized output based on customer-specific context, such as available documentation, existing code, and other parameters. Other planned enhancements include integrating machine learning models for automated reconciliation between source and target systems, and supporting other components of mainframe modernization, such as VSAM file migration.

Challenges and issues

During implementation, large source files could exceed the maximum message payload size of the WebSocket API. This can be mitigated by storing the data in S3 and accessing it from there instead of sending it through the WebSocket connection.

For very large migration volumes, the cost of generating SQL queries and inserting data into the Guidewire system through the agent can be prohibitive. In those scenarios, part of the work can be handled by conventional ETL, with the agent generating the ETL scripts.
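One way to apply the S3 workaround is to keep large file contents off the WebSocket entirely: small files travel inline, while larger ones are uploaded directly to S3 using a presigned URL, and only the object key is sent in the chat message. At the time of writing, API Gateway documents a 128 KB message payload quota (with 32 KB frames) for WebSocket APIs; verify this against current service quotas. The threshold below leaves headroom for the JSON envelope, but treat it, the `source-files/` key prefix, and the `plan_upload` helper as assumptions rather than the solution's actual design.

```python
import uuid

# Assumed headroom under the documented 128 KB WebSocket message payload quota.
MAX_INLINE_BYTES = 96 * 1024


def plan_upload(file_size: int, bucket: str, s3_client, max_inline: int = MAX_INLINE_BYTES) -> dict:
    """Decide whether a file can travel over the WebSocket or must go to S3 first."""
    if file_size <= max_inline:
        return {"mode": "inline"}
    key = f"source-files/{uuid.uuid4()}"  # hypothetical key prefix
    # Presigned PUT URL: the browser uploads straight to S3, bypassing the WebSocket.
    upload_url = s3_client.generate_presigned_url(
        "put_object",
        Params={"Bucket": bucket, "Key": key},
        ExpiresIn=900,  # 15 minutes
    )
    return {"mode": "s3", "bucket": bucket, "key": key, "upload_url": upload_url}
```

In the `s3` case, the UI would upload the file to `upload_url` and then send only the prompt and `key` over the WebSocket; the backend (or an action group) can read the object with the S3 `get_object` call when the agent needs it.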
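ETL helps with cost because a script generated once by the agent can then move any volume of data without further model calls. The following is a hypothetical example of the loading half of such a script: it streams rows into the target table in fixed-size batches and commits one transaction per batch, so a failure affects only the current batch. `sqlite3` stands in for the target database, and `batched` and `bulk_load` are illustrative names, not code generated by the agent.

```python
import sqlite3
from itertools import islice
from typing import Iterable, Iterator


def batched(rows: Iterable, size: int) -> Iterator[list]:
    """Yield lists of up to `size` items (itertools.batched is only in Python 3.12+)."""
    iterator = iter(rows)
    while batch := list(islice(iterator, size)):
        yield batch


def bulk_load(conn: sqlite3.Connection, insert_sql: str, rows: Iterable[tuple], batch_size: int = 1000) -> int:
    """Load rows in fixed-size batches, one transaction per batch; return the total loaded."""
    total = 0
    for batch in batched(rows, batch_size):
        with conn:  # a failed batch rolls back without undoing earlier batches
            conn.executemany(insert_sql, batch)
        total += len(batch)
    return total
```

Because `rows` can be a generator, the source extract never has to fit in memory at once.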

Conclusion

The solution demonstrates how to build a comprehensive platform that accelerates the migration of claim management systems using Amazon Bedrock Agents and Amazon Nova Pro. It can be further customized to improve customer experience across a variety of migration use cases in the insurance industry.

For more detailed information, a demonstration, or to implement this solution, please reach out to our team of experts: awsecosystembu@hcltech.com