Abstract

The expanding landscape of autonomous agents promises to revolutionize business workflows across industries. To unlock their potential, these agents must be able to communicate and collaborate effectively. Early agent implementations often relied on ad-hoc solutions for communication, leading to fragmented ecosystems and limited interoperability. This prompted the development of standardized protocols, such as Anthropic’s Model Context Protocol (MCP) and, more recently, Google Cloud's Agent-to-Agent (A2A) framework. The launch of A2A represents a significant shift toward standardized, connected and collaborative agent-driven environments, paving the way for more complex and effective agent-driven solutions.

Historic look back

Since their inception, LLMs have proven influential in generating information. However, they are often limited by the data they were trained on, restricting them from fetching real-time, contextual data. Agentic apps shouldn't live in a vacuum; they need to interact with the world and provide the latest information to make their responses more meaningful and relevant. Even the most advanced LLMs can only offer static, outdated or generalized answers without the ability to access real-time data.

Moreover, LLM-powered agentic apps become even more powerful when they offer personalized responses based on user-specific data or preferences. By scraping data from the internet, staying updated on current events, providing real-time weather information and financial data, querying databases and accessing customer profiles, agentic apps stay relevant and perform tasks that require a current, dynamic context. As the need for real-time information and dynamic contextualization grew, developers began creating integration touchpoints to enable LLMs to communicate with external systems.

This brought its own set of challenges. Initially, many solutions were developed without interoperability in mind, as systems were hardcoded and tightly coupled to specific agent architectures and models. This restricted information sharing and made it difficult for agents built on different platforms or models to collaborate or exchange data. Additionally, such tightly coupled systems posed scalability issues, as integrating new tools or services often required significant rework or bespoke solutions.

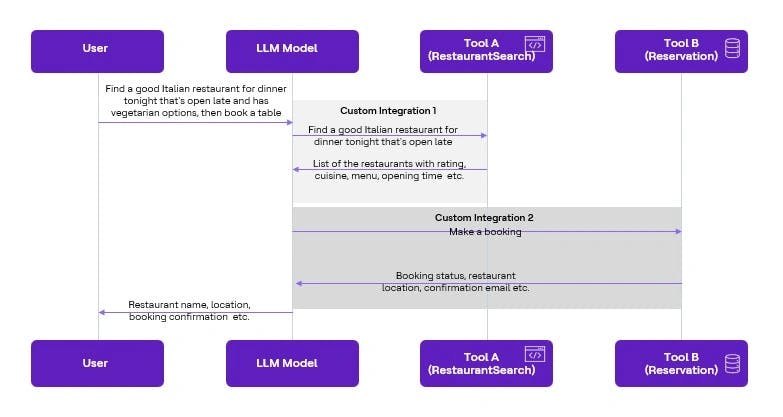

Here's a simple example: A user wants to book a good Italian restaurant for dinner that is open late and has vegetarian options. Here’s what the flow of information would look like:

While integrating external data sources and APIs was necessary, early implementations faced challenges in creating a flexible, scalable infrastructure that could accommodate the fast-evolving nature of AI technologies. This created bottlenecks for developers and businesses looking to scale their agentic apps across different systems, tools and use cases. The need for a standardized, flexible framework, enabling smooth interaction between diverse systems and data sources, became apparent.

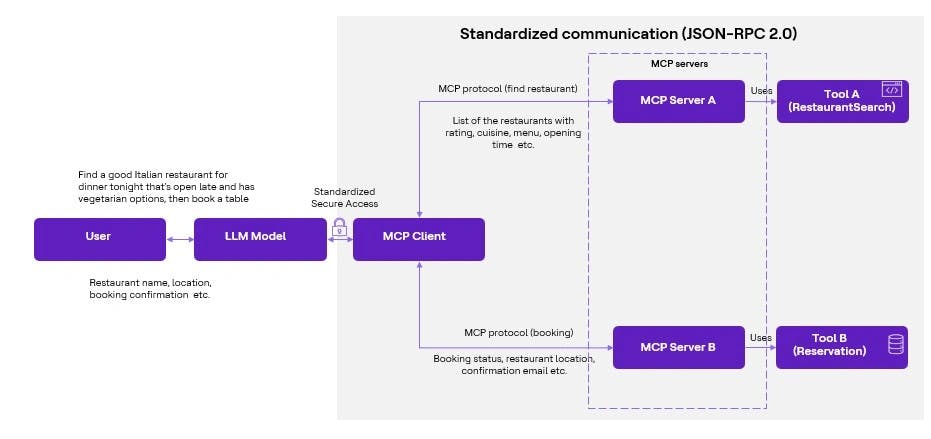

MCP standardizes the connection

Model Context Protocol (MCP), released by Anthropic in November 2024, is positioned as a new open standard for how context is provided to AI applications [1]. It acts as a universal adapter, enabling agents to ground their reasoning and actions in relevant, real-time information. MCP establishes a secure, standardized and efficient two-way connection between GenAI apps (MCP clients) and enterprise data sources (accessed via MCP servers). Communication is built upon JSON-RPC 2.0 and runs through a host, which manages the overall lifecycle, enforces security policies and coordinates the integration of context from various sources for the AI model.
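To make the JSON-RPC 2.0 framing concrete, here is a minimal sketch of the request and response envelopes an MCP client might exchange with a server. The `tools/call` method name follows the MCP specification; the tool name `search_restaurants`, its arguments and the shape of the simulated result are assumptions made up for illustration, and no real transport is involved.

```python
import json
from itertools import count

# JSON-RPC ids let the client match each response to the request that caused it.
_ids = count(1)


def make_request(method: str, params: dict) -> dict:
    """Build a JSON-RPC 2.0 request object."""
    return {"jsonrpc": "2.0", "id": next(_ids), "method": method, "params": params}


def parse_response(raw: str, expected_id: int):
    """Return the result of a JSON-RPC 2.0 response, or raise on an error object."""
    msg = json.loads(raw)
    if msg.get("jsonrpc") != "2.0" or msg.get("id") != expected_id:
        raise ValueError("not a matching JSON-RPC 2.0 response")
    if "error" in msg:
        raise RuntimeError(f"{msg['error']['code']}: {msg['error']['message']}")
    return msg["result"]


# Hypothetical tool name and arguments, chosen to match the restaurant example.
request = make_request(
    "tools/call",
    {"name": "search_restaurants", "arguments": {"cuisine": "italian", "vegetarian": True}},
)
wire = json.dumps(request)  # what would be sent to the MCP server

# Simulated server reply; the result payload here is invented for the sketch.
reply = json.dumps(
    {"jsonrpc": "2.0", "id": request["id"], "result": {"restaurants": ["Trattoria Verde"]}}
)
result = parse_response(reply, request["id"])
```

The point of the envelope is that every tool, whatever it does, is invoked through the same three fields (`id`, `method`, `params`), which is what lets one client talk to many servers without bespoke glue.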

This illustration shows how LLMs interact with external tools, APIs and data sources. MCP brings standardization and addresses the interoperability and scalability challenges, making it easier to build more dynamic, adaptable and robust agentic apps. Developers can now focus on creating intelligent systems that hallucinate less and collaborate across platforms. Such systems are more accurate, can access real-time data and offer personalized, context-aware responses that evolve with the environment and the user’s needs.

Agentic evolves: the A2A imperative [2]

Individual agents are designed with specific skills and expertise, allowing them to focus on particular aspects of a task. Many real-world problems, however, are too complex for a single agent to handle effectively. Individual agents may each have access to their own resources, such as data, sensors and APIs, which, when shared, allow problems to be solved in a larger context. For example, one agent might have access to real-time weather data, while another has the expertise to predict energy demand. By collaborating, they can optimize energy production based on predicted weather conditions. The ability of agents to exchange information and share perspectives often leads to a more thorough response in pursuit of a common goal.

A2A, an open protocol launched by Google Cloud with broad industry support [3], focuses on standardizing communication between AI agents. It provides a common language and framework for agents to discover each other, delegate tasks, negotiate capabilities, safely exchange information and coordinate collaborative workflows across diverse platforms and vendors, regardless of their underlying frameworks or origins.

While MCP addresses the agent-environment interaction and A2A addresses the agent-agent interaction, the real power lies in their combination. Using both protocols, developers can create sophisticated multi-agent systems (MAS). Such systems have two defining characteristics:

- Groups of specialized agents collaborate on complex tasks using A2A for coordination and communication.

- Individual agents within the group leverage MCP to access the specific tools, APIs and data required to execute their assigned sub-tasks effectively.

This layered approach fosters modularity, enhances agent capabilities, improves system reliability and accelerates the development of truly interoperable and intelligent AI agent systems.

A2A architecture [4]: The agent card

A2A employs a client-server architecture for interaction between agents. A fundamental requirement for agent collaboration is the ability of agents to find each other and understand their capabilities, which is facilitated through the Agent Card. The client agent initiates a request or delegates a task to another agent. The server agent (or remote agent), in contrast, exposes its capabilities via an HTTP endpoint, receives requests from client agents and executes the requested tasks. The Agent Card is the most essential element for structuring agent interactions. It is a standardized JSON metadata file that acts as an agent's public profile or digital identity, containing essential information such as the agent's ID, name, description, capabilities/skills, endpoint URL, authentication requirements, supported modalities and potentially MCP support details.
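As a rough illustration of what such a profile could contain, the sketch below models an Agent Card as Python dataclasses and serializes it to JSON. The field names mirror the items listed above rather than the exact A2A schema, and the agent, URL and skill identifiers are hypothetical.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Skill:
    id: str
    name: str
    description: str
    tags: list[str] = field(default_factory=list)


@dataclass
class AgentCard:
    # Field names are illustrative and may differ from the published A2A schema.
    name: str
    description: str
    url: str                    # HTTP endpoint where the server agent accepts requests
    authentication: list[str]   # accepted authentication schemes
    modalities: list[str]       # supported input/output content types
    skills: list[Skill]

    def to_json(self) -> str:
        """Serialize the card to the JSON document a client agent would fetch."""
        return json.dumps(asdict(self), indent=2)

    def supports(self, tag: str) -> bool:
        """True if any advertised skill carries the given tag."""
        return any(tag in skill.tags for skill in self.skills)


# Hypothetical card for the restaurant agent used in this article's example.
card = AgentCard(
    name="Restaurant Agent",
    description="Finds restaurants and books tables.",
    url="https://agents.example.com/restaurant",
    authentication=["bearer"],
    modalities=["text"],
    skills=[
        Skill(
            id="book-table",
            name="Book a table",
            description="Reserve a table at a chosen restaurant.",
            tags=["restaurant", "reservation"],
        )
    ],
)

print(card.to_json())
```

A client agent that has fetched this document can call something like `card.supports("reservation")` to decide whether the remote agent is worth delegating to before it sends any task.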

A2A leverages standard, widely adopted web protocols for communication, promoting easier integration with existing infrastructure. The primary transport is HTTP(S) for request-response interactions. The message payload format is defined by a JSON specification, potentially utilizing JSON-RPC 2.0 principles for structuring remote procedure calls.
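The server side of this exchange can be sketched as a small dispatcher that takes the body of an HTTP POST, routes it by JSON-RPC method and returns a JSON-RPC response. This is a sketch under stated assumptions: the method name `tasks/send` and the task payload are illustrative, and the HTTP layer is left out so the example stays self-contained. The error codes are the standard ones defined by JSON-RPC 2.0.

```python
import json


def _error(req_id, code: int, message: str) -> str:
    """Serialize a JSON-RPC 2.0 error response."""
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "error": {"code": code, "message": message}})


def handle_request(raw: str, handlers: dict) -> str:
    """Dispatch one JSON-RPC 2.0 request body to the matching handler."""
    try:
        req = json.loads(raw)
    except json.JSONDecodeError:
        return _error(None, -32700, "Parse error")
    if not isinstance(req, dict) or req.get("jsonrpc") != "2.0" or "method" not in req:
        return _error(None, -32600, "Invalid Request")
    handler = handlers.get(req["method"])
    if handler is None:
        return _error(req.get("id"), -32601, "Method not found")
    result = handler(req.get("params", {}))
    return json.dumps({"jsonrpc": "2.0", "id": req.get("id"), "result": result})


def send_task(params: dict) -> dict:
    """Hypothetical task handler: accepts the task and reports it as completed."""
    return {"task": params.get("task"), "status": "completed"}


# "tasks/send" is an assumed method name used only for this sketch.
HANDLERS = {"tasks/send": send_task}

ok = handle_request(
    json.dumps({"jsonrpc": "2.0", "id": 7, "method": "tasks/send", "params": {"task": "book-table"}}),
    HANDLERS,
)
unknown = handle_request(json.dumps({"jsonrpc": "2.0", "id": 8, "method": "tasks/unknown"}), HANDLERS)
```

Because the contract is just HTTP plus a JSON envelope, a handler like this can sit behind whatever web framework and authentication layer an enterprise already runs.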

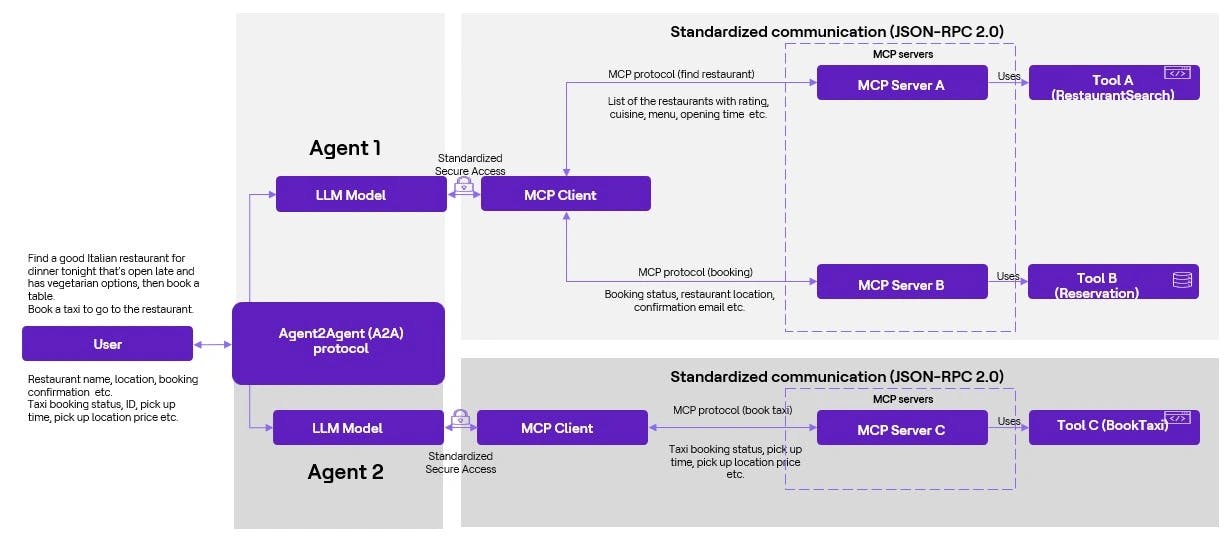

Let’s go back to the restaurant selection. The user wants to book a good Italian restaurant for dinner tonight that's open late and has vegetarian options and, after dinner, wants to book a taxi to go home. A2A lets two independent systems coordinate to fulfill this request.

Agent 1 - Restaurant search and reservation: The agent receives the request, communicates with a specialized restaurant search platform through an MCP server and shortlists a few vegetarian restaurants based on their rating and other criteria. Through another MCP server, it checks availability at those restaurants to find suitable options and confirms the booking. That MCP server then relays the confirmation back to Agent 1.

Agent 2 - Taxi booking: Once the restaurant booking is confirmed, a second agent knows the approximate time and location for the taxi pick-up. It sends a booking request to another MCP server (ride-sharing services), which handles the actual ride request, assigns a driver and provides real-time updates back to the agent.

This example of a complex, multi-step user request illustrates how two distinct agents, residing on separate platforms, interact with various external services, leveraging A2A to provide cross-platform agent orchestration.
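The flow above can be sketched in a few lines of Python. Everything here is a stand-in: the three functions at the top play the role of MCP servers, the hand-off between the two agents is a plain method call where A2A messaging would be used in practice, and the catalog data and two-hour dinner duration are invented for illustration.

```python
# Stand-ins for the MCP servers in the example; a real agent would reach these
# through an MCP client rather than calling local functions.
CATALOG = [
    {"name": "Trattoria Verde", "address": "12 Olive St", "rating": 4.7, "vegetarian": True, "closes": 23},
    {"name": "Pasta Notte", "address": "8 Canal Rd", "rating": 4.4, "vegetarian": True, "closes": 24},
    {"name": "Il Forno", "address": "3 Mill Ln", "rating": 4.9, "vegetarian": False, "closes": 23},
    {"name": "Orto", "address": "5 Park Ave", "rating": 4.8, "vegetarian": True, "closes": 21},
]


def search_restaurants(vegetarian: bool, open_until: int) -> list[dict]:
    """Return restaurants that meet the dietary and closing-time criteria."""
    return [r for r in CATALOG if (r["vegetarian"] or not vegetarian) and r["closes"] >= open_until]


def reserve_table(name: str, hour: int) -> dict:
    return {"restaurant": name, "hour": hour, "status": "confirmed"}


def request_ride(pickup: str, hour: int) -> dict:
    return {"pickup": pickup, "hour": hour, "status": "driver assigned"}


class RestaurantAgent:
    def handle(self, request: dict) -> dict:
        """Search, pick the best-rated match and confirm the booking."""
        candidates = search_restaurants(request["vegetarian"], request["open_until"])
        if not candidates:
            raise LookupError("no restaurant matches the request")
        best = max(candidates, key=lambda r: r["rating"])
        confirmation = reserve_table(best["name"], request["dinner_hour"])
        return {**confirmation, "address": best["address"]}


class TaxiAgent:
    def handle(self, task: dict) -> dict:
        """Book a ride for the pick-up details delegated by the other agent."""
        return request_ride(task["pickup"], task["hour"])


def plan_evening(request: dict) -> dict:
    booking = RestaurantAgent().handle(request)
    # Assumption: dinner lasts about two hours, so the taxi is booked for then.
    task = {"pickup": booking["address"], "hour": request["dinner_hour"] + 2}
    ride = TaxiAgent().handle(task)  # in practice this hand-off would be an A2A message
    return {"booking": booking, "ride": ride}


plan = plan_evening({"vegetarian": True, "dinner_hour": 20, "open_until": 23})
```

Note how the taxi agent never sees the restaurant catalog: it receives only the pick-up address and time, which is the kind of narrow, task-level exchange the protocol is meant to standardize.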

Integration with agent development frameworks like Google Cloud's Agent Development Kit (ADK), CrewAI, LangGraph, LlamaIndex, Semantic Kernel and others will simplify the implementation of A2A-compliant agents. While MCP and A2A serve distinct purposes, their real potential emerges when used in concert. They are not competing standards but complementary protocols addressing different, essential layers of interaction required for building sophisticated, autonomous AI agent systems.

The adoption of the A2A protocol presents compelling business advantages for enterprises:

- Enhanced workflow automation and operational efficiency: A2A facilitates more autonomous and streamlined multi-agent workflows, significantly reducing manual intervention and improving task coordination across various business functions.

- Reduced integration complexity and cost: By providing a standardized communication framework, A2A eliminates the need for costly, proprietary integrations between disparate AI systems, lowering development and maintenance overhead.

- Improved scalability and flexibility: A2A enables enterprises to build modular, scalable AI ecosystems, easily adding new agents and capabilities while supporting cross-platform and cross-vendor interoperability for greater adaptability.

- Strengthened security and compliance: By leveraging established web security standards and incorporating features like agent opacity and robust authentication, A2A enhances the security posture and suitability of multi-agent environments.

Business benefits from A2A

The practical application of A2A for workflow automation is evident across various business domains; a few examples are below:

- Loan processing: A primary loan processor agent can initiate a loan application task. Using A2A, it can discover and delegate sub-tasks to specialized agents: a risk assessment agent evaluates borrower risk, a compliance agent checks regulatory adherence and, upon approval, a disbursement agent schedules the fund transfer. Each agent operates autonomously, exchanging necessary information and status updates via A2A, which streamlines the end-to-end approval process.

- IT helpdesk: An employee reports a laptop issue via an internal IT agent. This agent can use A2A to coordinate a resolution. It might first query a hardware diagnostic agent. If that agent identifies a software issue, the IT agent could invoke a software rollback agent. If the problem persists, a device replacement agent could be tasked to initiate a hardware swap. Each agent leverages its specific expertise, communicating asynchronously via A2A, leading to faster and more automated issue resolution.

- Supply chain optimization: Using A2A, a network of AI agents can help manage demand fluctuations through real-time data sharing and collaborative decision-making. For example, a demand forecasting agent detecting a surge could trigger an A2A task for the inventory agent to check stock and simultaneously task the procurement agent to adjust purchasing strategies, all without manual intervention.

- Financial trading: In high-frequency trading scenarios, multiple agents can monitor different market segments, analyze risk factors and collaboratively execute trades based on shared insights communicated via A2A, enabling split-second decision-making.

- Customer service: A front-line chatbot or customer service agent encountering a complex query beyond its scope can use A2A to dynamically discover and route the issue to a specialized technical support agent or a product expert agent. This improves first-contact resolution rates and customer satisfaction by efficiently connecting customers with the right expertise.
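The discover-and-delegate pattern that runs through these examples can be sketched with the loan processing case. This is an illustration only: the registry is an in-memory dictionary standing in for Agent Card discovery, each agent is a local function rather than a remote service, and the skill names and approval rules (such as the 0.5 loan-to-income threshold) are invented.

```python
from typing import Callable, Optional

Agent = Callable[[dict], dict]

# In-memory stand-in for discovery: maps an advertised skill to an agent.
REGISTRY: dict[str, Agent] = {}


def register(skill: str):
    """Decorator that advertises a function as the agent for a skill."""
    def wrap(agent: Agent) -> Agent:
        REGISTRY[skill] = agent
        return agent
    return wrap


def discover(skill: str) -> Optional[Agent]:
    return REGISTRY.get(skill)


@register("risk-assessment")
def risk_agent(app: dict) -> dict:
    ratio = app["amount"] / app["annual_income"]
    # Hypothetical rule: reject when the loan exceeds half the annual income.
    return {"approved": ratio <= 0.5, "note": f"loan-to-income ratio {ratio:.2f}"}


@register("compliance-check")
def compliance_agent(app: dict) -> dict:
    note = "identity verified" if app["id_verified"] else "identity not verified"
    return {"approved": app["id_verified"], "note": note}


@register("disbursement")
def disbursement_agent(app: dict) -> dict:
    return {"approved": True, "note": f"transfer of {app['amount']} scheduled"}


def process_loan(app: dict, steps=("risk-assessment", "compliance-check", "disbursement")) -> dict:
    """Delegate each step to a discovered agent and stop at the first rejection."""
    trail = []
    for skill in steps:
        agent = discover(skill)
        if agent is None:
            trail.append((skill, "no agent available"))
            return {"approved": False, "trail": trail}
        outcome = agent(app)
        trail.append((skill, outcome["note"]))
        if not outcome["approved"]:
            return {"approved": False, "trail": trail}
    return {"approved": True, "trail": trail}


accepted = process_loan({"amount": 20000, "annual_income": 80000, "id_verified": True})
rejected = process_loan({"amount": 60000, "annual_income": 80000, "id_verified": True})
```

The coordinating agent knows only the skills it needs, not which agent provides them, so a specialized agent can be replaced or added without changing the workflow itself.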

Conclusion

The Agent2Agent (A2A) protocol represents a fundamental paradigm shift towards a future where AI operates not as a collection of isolated tools but as a dynamic, collaborative ecosystem of intelligent agents. Enterprises that proactively adopt standardized communication protocols like A2A will be better positioned to navigate this transition effectively. By breaking down internal AI silos and fostering a truly interoperable ecosystem, organizations can unlock the collective intelligence of their AI investments. As AI becomes increasingly embedded within enterprise operations, the ability of these systems to communicate, coordinate and collaborate effectively will be a defining factor in organizational success.