Key takeaways

- AI Factories turn data into outcomes, with repeatability as the differentiator

- The ecosystem matters because no single provider can deliver the full end-to-end chain alone

- Data locality drives architecture: mega-scale, distributed edge and “AI PC” all play roles

- Real ROI comes from clear business cases, including safety, throughput and productivity

- Adoption depends on simplifying the experience for end users, not exposing complexity

- Agentic value scales when subject matter experts stay central and workflows become “human changeable”

At HCLTech’s pavilion during the 2026 World Economic Forum in Davos, a roundtable with Dell Technologies focused on a shift many enterprises are now feeling acutely: AI is no longer the question. Execution is. Data is abundant, decisions are time-sensitive and early experimentation is giving way to pressure for repeatable delivery. The idea of an AI Factory offered a useful framing. Not as a buzzword, but as an operating model, a way of manufacturing intelligence consistently, securely and at scale.

The discussion comes at a moment when HCLTech is publicly signalling a sharper strategic pivot toward AI-led growth. Speaking in Davos this week, the company’s leadership pointed to AI factories and Physical AI as emerging growth engines that could drive AI-related revenues to $2.5 billion over the coming years, helping offset pricing pressure in legacy services while unlocking new discretionary enterprise spending. This context gave added relevance to the roundtable’s focus.

Dell leaders framed the Dell AI Factory as the bridge between raw inputs and business value, while HCLTech’s Chief Technology Officer and Head of Ecosystems, Vijay Guntur, grounded the AI Factory discussion in outcomes. Across industrial operations, edge environments and emerging agent-led workflows, the recurring theme was simple: start with what you are trying to change, then assemble the stack to make that change repeatable.

Below are six of the most important learnings from the discussion.

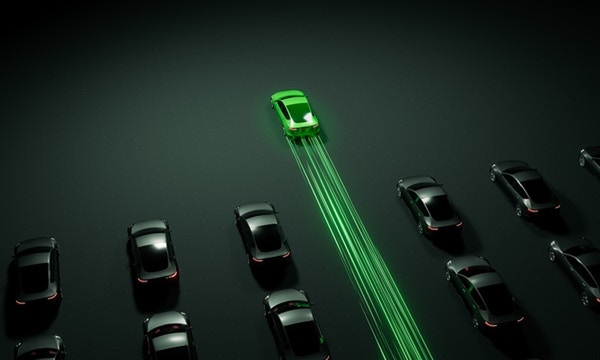

1. AI Factories are infrastructure, software and services that turn data into outcomes

Dell Technologies’ Kyle Leciejewski, Senior Vice President, North America Sales and Customer Operations, offered a clear, simple definition: “it’s a stack of infrastructure, software and services that have data as an input and outcomes as the output.” That framing matters because it moves the conversation away from tools and toward repeatability. Many organizations can assemble one-off pilots. Far fewer can standardize the journey from data to models, to production workflows and to measurable impact.

Across industries, the use cases varied sharply. Examples discussed ranged from code assistance and productivity gains in software development to computer vision improving yield and quality in manufacturing, faster imaging analysis in healthcare, and digital agents connecting citizens to public services.

Looking at the overall impact, Guntur said: “AI Factories manufacture intelligence [and] there are many ways for that intelligence to be manufactured.” The mechanism can vary by deployment model, but the goal is consistency. “Focus on the outcome,” he said. The factory is “a means to an end,” not the end itself.

2. Outcomes, not architecture, should decide how the factory is built

A recurring theme was that an AI Factory is not a single reference architecture. It is a configurable system that must be designed backward from a business goal. Guntur emphasized this repeatedly: “The way your factory is constructed is based on your need.” If the requirement is privacy, sovereignty or full control, the configuration shifts. If the need is speed, scale or elasticity, the configuration shifts again.

Dell Technologies’ Chief Partner Officer, Denise Millard, built on that by anchoring Dell AI Factories in real customer work. She highlighted how outcomes often require multiple ingredients working together, such as “video surveillance...software...management [and] infrastructure” delivered as one cohesive solution. In one example, a customer’s operational goal was concrete and measurable: “to get more containers on the ships.” The result was equally specific: “they saw the ability to ship more.” That outcome only happened because the ecosystem was assembled as an integrated delivery motion, not purchased as isolated components.

Across the conversation, the underlying takeaway was pragmatic: an AI Factory is most useful when it makes execution repeatable, reduces friction and keeps the business case visible throughout delivery.

3. Multicloud and multi-model reality makes the ecosystem the strategy

Millard highlighted the operating reality enterprises now face: “we believe it’s a multicloud...and multi-AI world.” In practice, that means intelligence will be manufactured across SaaS platforms, public clouds and on-prem environments simultaneously. For most organizations, the challenge is less about choosing a single destination and more about governing the range of environments.

In that context, partnerships become a mechanism for bridging environments. Millard pointed to “building those bridges” so customers can run where they need to run. The discussion also reflected the market drivers behind this: privacy, security and sovereignty requirements are shaping deployment choices in ways that make a single-cloud strategy unrealistic for many.

“No one company can do this whole stack,” added Guntur, describing innovation pressure across silicon, GPUs, data centers, edge devices and model layers. The AI Factory model, in this view, is not just a technical stack but a coordination model that lets enterprises benefit from specialized providers without needing to become system integrators themselves.

4. Edge is not a footnote; it is where much of the real-world data is created

One of the strongest clarifying moments came when Leciejewski tied architecture directly to data locality. He said: “A majority of the world’s data is not in some centralized cloud. It’s on-prem. And a lot of the new data that gets created is not going to get created in a public cloud or in a data center. It’s going to get created out in the real world at the edge point.”

He added: “We think the compute will ultimately follow the data.” That sets up an AI Factory model that spans:

- Mega-scale data centers and “neo cloud” environments

- Distributed edge locations making localized, real-time decisions

- The far edge, where “AI PC” becomes part of the delivery story

This breadth is a practical advantage because enterprise AI use cases rarely live in one place. Data is produced in factories, ports, retail locations and devices. Intelligence must be manufactured where latency, connectivity and operational risk demand it.

Even when the infrastructure exists, the harder problem is connecting the data safely across internal silos. The workflow for linking disparate sources brings governance, control and security challenges that require partner capability, not just hardware.

5. ROI fails when the business case is unclear, even if the technology improves

The group returned repeatedly to a familiar pattern: organizations invest time and money and fail to get what they expected, not because models cannot perform, but because the business case or outcome was never defined tightly enough.

“You didn’t start well [if you weren’t] thinking about what the business case was in the first place,” said Guntur. He acknowledged that costs will drop and performance will improve, but argued the determining factor is still whether a use case “really motivates the organization to investment and [embrace] the hard part of the change.”

The roundtable also viewed ROI beyond the productivity path. In some cases, the return is obvious in conventional terms: throughput, yield, labor efficiency or reduced downtime. In other cases, the value is existential. Guntur pointed to safety-driven outcomes: if a use case prevents fatalities, the ROI conversation changes shape.

This is where AI Factories become more than a technology construct. They become a discipline for selecting problems worth solving, defining success metrics upfront and building an execution pattern that can be repeated.

6. Agentic value scales when workflows stay human-centered and usable

Agent-led workflows were discussed as the final trend, with agents positioned as a support system rather than a replacement for expertise. A participant highlighted that scaling agentic execution is about enabling subject matter experts, capturing how they work and keeping them in control of workflows as conditions change.

This approach is especially relevant for regulated, high-stakes environments: decision workflows become system-readable but remain “human changeable.” In other words, the point is not to automate judgment out of the system, but to reduce the time experts waste organizing information so they can focus on the decisions only humans can make.

Guntur echoed this in a different way, emphasizing that simplicity is the key to adoption and to AI becoming pervasive and useful. The most advanced stack in the world will not matter if it cannot be used easily by the people closest to the work. An AI Factory becomes transformative when it is configured so end users can act on outputs without needing to understand the complexity underneath.

Making intelligence manufacturable at scale

The AI Factory message that landed most clearly was also the simplest: intelligence can be manufactured at scale, but only when leaders treat execution as an operating discipline, not a one-off initiative.

The organizations that win will not be the ones with the most pilots. They will be the ones that can standardize how intelligence is built, deployed, governed and improved across industrial systems, edge environments and agentic workflows, then prove that value in the language the business cares about.

FAQs

1. What is an AI Factory?

An AI Factory is an operating model that turns data into usable intelligence through repeatable infrastructure, software and workflows, rather than one-off experiments or isolated deployments.

2. How is an AI Factory different from traditional AI projects?

Traditional projects focus on individual use cases. An AI Factory standardizes how models are built, deployed and run so intelligence can be produced consistently at scale.

3. Where does an AI Factory deliver the most value today?

It shows the strongest impact in environments with abundant data and time-sensitive decisions, including industrial operations, edge locations, supply chains and customer-facing workflows.

4. Does an AI Factory mean everything moves to the cloud?

No. AI Factories can span public cloud, on-premises and edge environments, with compute following data locality, latency requirements and regulatory constraints.

5. What determines whether an AI Factory succeeds?

Clear outcomes, clean and accessible data, strong governance and partners that can integrate infrastructure, models and workflows into systems people use.