Executive Summary

Media companies maintain archives exceeding 20 petabytes, often stored on physical tapes that remain largely inaccessible. This creates significant challenges: lost licensing revenue opportunities, operational inefficiencies and extended content retrieval times. HCLTech Media-IQ, built on AWS, transforms these "digital attics" into intelligent, AI-driven content platforms. By leveraging Amazon Bedrock for content understanding and Amazon SageMaker for predictive analytics, the solution reduces restore times by up to 90% and converts passive storage into active revenue streams.

Introduction

The global demand for content has never been higher. To feed 24/7 streaming platforms and social media channels, studios are racing to produce new shows. However, the most valuable assets often already exist. Decades of film, television and sports footage sit trapped in LTO tape libraries, which are difficult to search, slow to retrieve and expensive to maintain.

HCLTech is partnering with AWS to solve this "content paralysis." By moving from rigid tape infrastructure to the cloud, studios can pivot from a defensive posture (keeping data safe) to an offensive one (using data to monetize). This shift turns the archive from a cost center into a content factory. Moving petabytes of film masters to the cloud is no longer just about "cheap buckets." It’s about asset liquidity.

The Need

The media industry faces a "silent crisis" of accessibility. Traditional archives rely on physical tape robots and offline storage, creating three distinct pain points:

- The "black hole" of metadata: Tapes often have poor labeling. Editors know a shot exists "somewhere in the 1998 archives," but finding it requires physical retrieval and manual scanning, often taking days

- Retrieval latency: In a news or sports cycle, speed is everything. Waiting 24-48 hours for a tape to be robotically loaded and transferred is too slow for modern production workflows

- Cost unpredictability: Legacy systems suffer from "CapEx shocks" - massive investments required every few years to replace aging drives and libraries, regardless of business performance

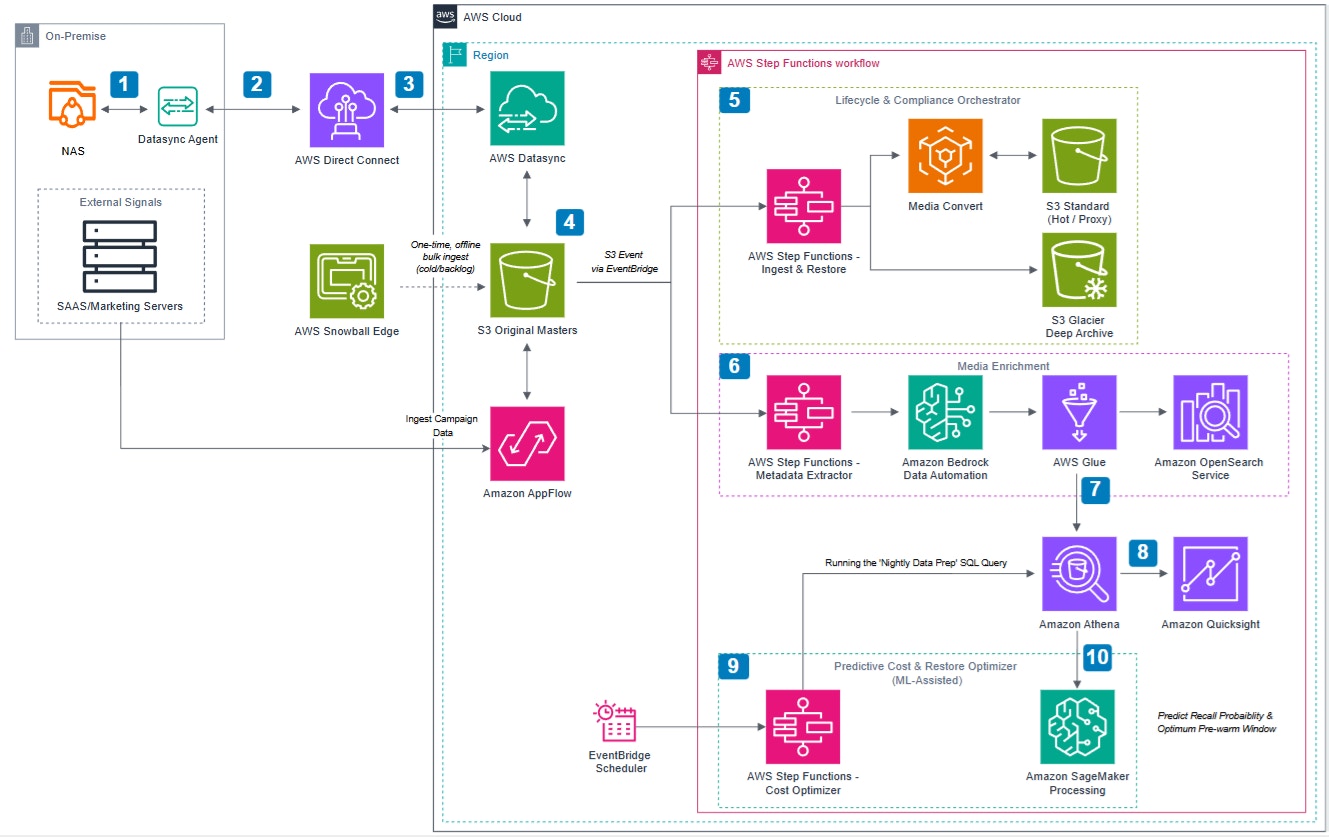

Solution Architecture

HCLTech Media-IQ transforms traditional archives into automated media supply chains. The solution combines Amazon S3's durability with AWS generative AI services to separate content discovery (always searchable) from preservation (cost-optimized storage).

Media-IQ serves as an intelligent supply chain orchestrator. Our unique edge is defined by three core financial and operational pillars:

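- Financial optimization ("touch-and-go"): Content lands in Amazon S3 Standard for immediate AI processing, then transitions to Amazon S3 Glacier Deep Archive. Because S3 Standard has no minimum storage duration, transient processing copies never incur the early-deletion charges that Deep Archive applies to objects removed within 180 days, while masters still reach the lowest-cost tier once processing completes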

- Decoupled discovery and retrieval: During ingestion, Media-IQ creates lightweight proxy files in hot storage while archiving master files. Editors can search and preview the entire library instantly without retrieval fees or wait times

- Automated governance: AWS Step Functions orchestrate lifecycle policies, while Amazon S3 Object Lock provides immutable preservation that prevents object versions from being deleted or overwritten, whether accidentally or maliciously
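The "touch-and-go" transition can be expressed as an S3 lifecycle rule. The sketch below is a minimal illustration under stated assumptions, not Media-IQ's actual configuration: the `masters/` prefix, the `ai-processing=complete` tag and the one-day delay are all hypothetical. It builds a dictionary in the shape that boto3's `put_bucket_lifecycle_configuration` accepts as its `LifecycleConfiguration` argument.

```python
from typing import Any

# Hypothetical tag the ingest workflow would set once AI processing finishes.
READY_TAG = {"Key": "ai-processing", "Value": "complete"}


def build_touch_and_go_lifecycle(
    master_prefix: str = "masters/",  # assumed prefix for high-res masters
    transition_days: int = 1,  # assumed delay before the move to Deep Archive
) -> dict[str, Any]:
    """Return a lifecycle configuration that moves processed masters to Deep Archive.

    Only objects under master_prefix that also carry READY_TAG match the rule,
    so proxies kept under a different prefix remain in S3 Standard.
    """
    if transition_days < 0:
        raise ValueError("transition_days must be zero or positive")
    return {
        "Rules": [
            {
                "ID": "masters-to-deep-archive",
                "Status": "Enabled",
                # "And" requires both the prefix and the tag to match.
                "Filter": {"And": {"Prefix": master_prefix, "Tags": [READY_TAG]}},
                "Transitions": [
                    {"Days": transition_days, "StorageClass": "DEEP_ARCHIVE"}
                ],
            }
        ]
    }
```

Matching on both prefix and tag lets the ingest workflow decide when a master is ready to leave hot storage. S3 evaluates lifecycle rules asynchronously, so eligible objects move some time after they qualify rather than immediately, and once an object reaches Deep Archive its 180-day minimum storage duration applies.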

The AI intelligence layer

Media-IQ's differentiation comes from its AI-powered value extraction, built on Amazon Bedrock and Amazon SageMaker:

- Multimodal asset intelligence with Amazon Bedrock: Amazon Bedrock Data Automation analyzes video content to extract rich context - identifying people, objects, scene characteristics (e.g., "tense car chase") and technical specifications. This transforms generic filenames into searchable business assets with deep metadata

- Semantic search capabilities: Using vector embeddings stored in Amazon OpenSearch Service, creative teams search by concept or intent (e.g., "90s Tokyo street style") rather than filenames. This unlocks previously invisible licensing opportunities for historical footage and B-roll content

- Predictive content delivery with Amazon SageMaker: By correlating internal access patterns with external business signals (marketing calendars ingested via Amazon AppFlow), Amazon SageMaker forecasts content demand. The system automatically "pre-warms" assets from deep archive storage ahead of franchise anniversaries or campaigns, eliminating production delays
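Semantic search of this kind typically relies on the OpenSearch k-NN plugin. The sketch below assumes a hypothetical `embedding` field and a 1024-dimension embedding model; it builds an index body with a `knn_vector` field and an approximate k-NN query. The query vector would come from embedding the editor's phrase (for example "90s Tokyo street style") with the same model used at ingest; which model that is remains an assumption here.

```python
from typing import Any

EMBEDDING_FIELD = "embedding"  # hypothetical field name
EMBEDDING_DIM = 1024  # assumed; must match the embedding model's output size


def build_index_body(dim: int = EMBEDDING_DIM) -> dict[str, Any]:
    """Index settings and mappings that enable k-NN search on one vector field."""
    return {
        "settings": {"index": {"knn": True}},
        "mappings": {
            "properties": {
                EMBEDDING_FIELD: {"type": "knn_vector", "dimension": dim},
                "asset_id": {"type": "keyword"},
                "description": {"type": "text"},
            }
        },
    }


def build_knn_query(query_vector: list[float], k: int = 10) -> dict[str, Any]:
    """Approximate k-NN search body returning the k clips closest to the query."""
    if len(query_vector) != EMBEDDING_DIM:
        raise ValueError(
            f"expected {EMBEDDING_DIM} dimensions, got {len(query_vector)}"
        )
    return {
        "size": k,
        "query": {"knn": {EMBEDDING_FIELD: {"vector": query_vector, "k": k}}},
        # Return only lightweight fields, not the stored vectors.
        "_source": ["asset_id", "description"],
    }
```

With the `opensearch-py` client, these bodies could be passed to `client.indices.create(index=..., body=build_index_body())` and `client.search(index=..., body=build_knn_query(vector))`. The mapping's dimension must equal the model's output size, which is why the query builder checks it.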

Technical Implementation

- Content on the NAS is read over NFS/SMB by a local AWS DataSync agent, which parallelizes transfers, performs end-to-end integrity verification and copies only data that is new or changed since the previous run, so subsequent runs are incremental

- The agent streams these parallel transfers over TLS across an AWS Direct Connect circuit, throttling bandwidth as needed so production traffic is not impacted

- At the AWS endpoint, DataSync writes into the target S3 prefix and applies the object tags that drive lifecycle, search and reporting

- Files are stored durably with S3 Versioning and SSE-KMS encryption. Each new object emits an S3 "Object Created" event to Amazon EventBridge, which triggers the downstream workflows
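The event-driven hand-off in the last step can be sketched as an EventBridge rule pattern plus a handler. This assumes EventBridge delivery has been enabled on the bucket (for example via `put_bucket_notification_configuration` with an empty `EventBridgeConfiguration`); the bucket name and prefix are hypothetical.

```python
from typing import Any

ARCHIVE_BUCKET = "example-media-archive"  # hypothetical bucket name


def object_created_pattern(
    bucket: str = ARCHIVE_BUCKET, prefix: str = ""
) -> dict[str, Any]:
    """EventBridge event pattern for S3 "Object Created" events in one bucket."""
    detail: dict[str, Any] = {"bucket": {"name": [bucket]}}
    if prefix:
        # Prefix matching narrows the rule to, for example, newly landed masters.
        detail["object"] = {"key": [{"prefix": prefix}]}
    return {"source": ["aws.s3"], "detail-type": ["Object Created"], "detail": detail}


def parse_object_created(event: dict[str, Any]) -> tuple[str, str, int]:
    """Extract bucket name, object key and size from an S3 event sent via EventBridge."""
    detail = event["detail"]
    obj = detail["object"]
    return detail["bucket"]["name"], obj["key"], int(obj.get("size", 0))
```

A rule created with this pattern (for example via `boto3.client("events").put_rule(Name=..., EventPattern=json.dumps(pattern))`) would then target the Step Functions state machine that starts downstream processing.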

Side path: AWS Snowball Edge can bulk-ingest backlog data into the same bucket/prefix. The workflow implements a "Mezzanine" architecture within a single logical bucket. Upon ingest, Step Functions triggers AWS Elemental MediaConvert to generate a lightweight H.264 proxy for instant global preview, stored in S3 Standard. In parallel, the workflow transitions the high-res original master in place to S3 Glacier Deep Archive (the "Master Vault") for long-term preservation.
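One way to express the mezzanine step is an Amazon States Language definition with a `Parallel` state: one branch runs a MediaConvert job through the `createJob.sync` service integration, the other tags the master so that a lifecycle rule can move it to Deep Archive. Tagging for a lifecycle rule is a swapped-in technique for the in-place transition, and the job template name, role ARN and `vault=deep-archive` tag are all hypothetical.

```python
import json
from typing import Any

# Hypothetical names for illustration only.
PROXY_JOB_TEMPLATE = "example-h264-proxy-template"
MEDIACONVERT_ROLE_ARN = "arn:aws:iam::111122223333:role/example-mediaconvert-role"
VAULT_TAG = {"Key": "vault", "Value": "deep-archive"}


def build_mezzanine_state_machine() -> dict[str, Any]:
    """ASL definition: create a proxy and mark the master for the vault in parallel.

    Expects execution input such as {"bucket": "example-bucket", "key": "masters/clip.mxf"}.
    """
    create_proxy = {
        "Type": "Task",
        # Optimized integration; ".sync" waits until the MediaConvert job completes.
        "Resource": "arn:aws:states:::mediaconvert:createJob.sync",
        "Parameters": {
            "Role": MEDIACONVERT_ROLE_ARN,
            "JobTemplate": PROXY_JOB_TEMPLATE,  # outputs and H.264 settings live here
            "Settings": {
                "Inputs": [
                    {"FileInput.$": "States.Format('s3://{}/{}', $.bucket, $.key)"}
                ]
            },
        },
        "End": True,
    }
    tag_master = {
        "Type": "Task",
        # AWS SDK integration calling S3 PutObjectTagging on the original master.
        "Resource": "arn:aws:states:::aws-sdk:s3:putObjectTagging",
        "Parameters": {
            "Bucket.$": "$.bucket",
            "Key.$": "$.key",
            "Tagging": {"TagSet": [VAULT_TAG]},
        },
        "End": True,
    }
    return {
        "Comment": "Mezzanine ingest sketch (hypothetical)",
        "StartAt": "ProxyAndVault",
        "States": {
            "ProxyAndVault": {
                "Type": "Parallel",
                "Branches": [
                    {"StartAt": "CreateProxy", "States": {"CreateProxy": create_proxy}},
                    {"StartAt": "TagMasterForVault",
                     "States": {"TagMasterForVault": tag_master}},
                ],
                "End": True,
            }
        },
    }


def definition_json() -> str:
    """Serialize the definition for create_state_machine(definition=...)."""
    return json.dumps(build_mezzanine_state_machine())
```

The string from `definition_json()` could be passed as `definition` to `boto3.client("stepfunctions").create_state_machine(name=..., definition=..., roleArn=...)`. Keeping the proxy's output location and H.264 settings in the (assumed) MediaConvert job template keeps the state machine small.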

- Orchestrates content intelligence: invokes Amazon Bedrock to extract descriptive metadata (scene, actor, sentiment) and AWS Lambda to extract technical metadata (codec, bitrate), then indexes these assets in Amazon OpenSearch Service (vector store) for semantic discovery

- Amazon Athena uses the AWS Glue Data Catalog to run SQL directly against metadata stored in the S3 warm tier

- Amazon QuickSight connects to Athena to build dashboards covering ingest trends, warm↔cold ratios, restore SLAs, access patterns and cost/ROI, without moving the data out of S3

- A nightly Step Functions job prepares data via Athena to enforce governance, invokes ML scoring to predict likely recalls and evaluates lifecycle policies. It applies approved actions (pre-restoring assets to warm storage, updating lifecycle rules), writes a full audit trail to S3 and surfaces savings and SLA impact in QuickSight. Budget caps and optional human approval are enforced

- External signals ingested via Amazon AppFlow are prepared with Athena and fed into SageMaker to predict recall patterns and pre-warm assets from Deep Archive before campaigns launch
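The budget cap and optional approval in the nightly job can be illustrated with a small selection routine. This is a hypothetical sketch: the `Candidate` fields, thresholds and probability-per-dollar ranking are assumptions, and greedy selection is a heuristic rather than an optimal solution to the underlying knapsack problem.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Candidate:
    """One archived asset scored by a (hypothetical) recall-prediction model."""

    key: str
    recall_probability: float  # model output in [0, 1]
    restore_cost_usd: float  # estimated retrieval and request cost


@dataclass
class PrewarmPlan:
    auto_restore: list[str] = field(default_factory=list)
    needs_approval: list[str] = field(default_factory=list)
    spent_usd: float = 0.0


def plan_prewarm(
    candidates: list[Candidate],
    budget_usd: float,
    min_probability: float = 0.5,
    approval_threshold_usd: float = 100.0,
) -> PrewarmPlan:
    """Greedy, budget-capped choice of assets to pre-restore from Deep Archive.

    Candidates are ranked by recall probability per dollar. Any single restore
    costing more than approval_threshold_usd is queued for human approval and
    does not count against the automatic budget.
    """
    eligible = [c for c in candidates if c.recall_probability >= min_probability]
    # Guard against zero-cost entries when computing probability per dollar.
    eligible.sort(
        key=lambda c: c.recall_probability / max(c.restore_cost_usd, 1e-9),
        reverse=True,
    )
    plan = PrewarmPlan()
    for c in eligible:
        if c.restore_cost_usd > approval_threshold_usd:
            plan.needs_approval.append(c.key)
        elif plan.spent_usd + c.restore_cost_usd <= budget_usd:
            plan.auto_restore.append(c.key)
            plan.spent_usd += c.restore_cost_usd
    return plan
```

Routing expensive restores to a human, rather than dropping them, keeps the automatic path inside the budget while still surfacing high-value recalls for review.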

The HCLTech solution goes beyond simple storage, deploying a modular "Media Operating System" that handles the nuances of complex video workflows:

1. The ingestion pipeline:

AWS DataSync over AWS Direct Connect handles complex media structures with integrity verification, ingesting content to Amazon S3 Standard for AI processing. Automated lifecycle policies then seamlessly transition master files to Amazon S3 Glacier Deep Archive for cost-optimized storage.
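The DataSync behaviour described here (full verification, incremental copies, bandwidth shaping) maps onto task options. The sketch below builds an `Options` dictionary for `boto3.client("datasync").create_task(...)`; the specific values and the megabit-to-byte helper are illustrative assumptions rather than Media-IQ's settings.

```python
from typing import Any, Optional


def mbps_to_bytes_per_second(mbps: float) -> int:
    """Convert a cap in megabits per second (decimal) to bytes per second."""
    if mbps <= 0:
        raise ValueError("mbps must be positive")
    return int(mbps * 1_000_000 / 8)


def build_datasync_options(bandwidth_cap_mbps: Optional[float] = None) -> dict[str, Any]:
    """Options dict for DataSync create_task/update_task (values are assumptions)."""
    return {
        # Re-check the whole destination against the source when the run ends.
        "VerifyMode": "POINT_IN_TIME_CONSISTENT",
        # Copy only data or metadata that is new or different from the destination.
        "TransferMode": "CHANGED",
        # Keep objects in S3 even if they are later removed from the NAS.
        "PreserveDeletedFiles": "PRESERVE",
        # -1 lets DataSync use all available bandwidth.
        "BytesPerSecond": -1
        if bandwidth_cap_mbps is None
        else mbps_to_bytes_per_second(bandwidth_cap_mbps),
    }
```

`BytesPerSecond` is expressed in bytes, so a 5 Gbps cap becomes 625,000,000. Landing in S3 Standard also keeps full `POINT_IN_TIME_CONSISTENT` verification available, since DataSync does not support that verify mode when writing directly to the S3 Glacier Flexible Retrieval or Deep Archive storage classes.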

2. Proxy-based workflow architecture:

AWS Elemental MediaConvert generates lightweight H.264 proxy files upon ingestion, which remain in Amazon S3 Standard for instant preview capabilities. Once a master file has been restored from the archive, Amazon S3 byte-range GET requests let editors download only the segments they need, reducing data transfer (egress) costs while maintaining rapid access to the required content.
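Restores from Deep Archive are whole-object, so byte ranges help on the download side: once a master is restored, a `Range` header limits the transfer to the segment an editor needs. The helper below is a rough sketch that assumes a constant-bitrate file and ignores container headers and keyframe boundaries; a production tool would use the container's index instead.

```python
def byte_range_for_segment(
    start_s: float, end_s: float, bitrate_bps: int, file_size: int
) -> str:
    """HTTP Range value approximating a time segment of a constant-bitrate file.

    Assumes constant bitrate and ignores container overhead, so the result is an
    approximation that a real tool would widen to the nearest keyframes.
    """
    if not 0 <= start_s < end_s:
        raise ValueError("need 0 <= start_s < end_s")
    bytes_per_second = bitrate_bps // 8
    first = int(start_s * bytes_per_second)
    # Range end is inclusive; clamp it to the last byte of the file.
    last = min(int(end_s * bytes_per_second) - 1, file_size - 1)
    if first > last:
        raise ValueError("segment starts beyond the end of the file")
    return f"bytes={first}-{last}"
```

The result can be passed as `Range=` to `s3.get_object(Bucket=..., Key=...)`. For a 10-second segment starting at 0:10 in an 8 Mbps file, it yields `bytes=10000000-19999999`.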

3. AI-powered content curation:

AWS Lambda functions extract technical metadata including codec, frame rate and bitrate information from ingested content. Amazon Bedrock performs multimodal analysis to identify celebrities, objects and scene characteristics such as mood and action (e.g., "a tense chase in rainy Tokyo"). These insights are converted into vector embeddings to enable semantic search capabilities, allowing editors to search by concept such as "high-speed action" and discover clips that were never explicitly tagged with those terms.

4. Predictive restoration with Amazon SageMaker:

AWS Step Functions trigger Amazon Athena queries nightly to analyze access patterns, while Amazon AppFlow ingests external business signals such as marketing calendars and campaign schedules. Amazon SageMaker analyzes these combined datasets to predict content demand, and the workflow automatically pre-warms the predicted assets from Amazon S3 Glacier Deep Archive before creative teams request them.
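Pre-warming matters because Deep Archive restores are not instant: the Standard retrieval tier typically completes within 12 hours and the cheaper Bulk tier within 48 hours, and Expedited retrieval is not available for this storage class. The sketch below picks the cheapest tier that fits the predicted lead time and builds a `RestoreRequest` for `s3.restore_object`; the seven-day lifetime of the restored copy is an assumed default.

```python
from typing import Any

# Deep Archive retrieval tiers, cheapest first, with their completion windows in hours.
DEEP_ARCHIVE_TIERS = (("Bulk", 48), ("Standard", 12))


def build_restore_request(lead_time_hours: float, keep_days: int = 7) -> dict[str, Any]:
    """RestoreRequest using the cheapest tier that completes before the asset is needed.

    keep_days is how long the temporary restored copy stays readable (assumed default).
    """
    for tier, window_hours in DEEP_ARCHIVE_TIERS:
        if lead_time_hours >= window_hours:
            return {"Days": keep_days, "GlacierJobParameters": {"Tier": tier}}
    raise ValueError("Deep Archive restores need at least 12 hours of lead time")
```

A caller would pass the result as `RestoreRequest=` to `boto3.client("s3").restore_object(Bucket=..., Key=...)`. The earlier the forecast, the more restores can use the Bulk tier.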

5. Immutable compliance vault:

We implement a "Vault Account" architecture where backup copies are stored in a separate AWS account with S3 Object Lock in Compliance Mode. Even if a root user in the main account is compromised, the vault copy remains untouchable and undeletable.
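The vault bucket's default retention could be set with `put_object_lock_configuration`. The sketch assumes the bucket in the vault account already has versioning and Object Lock enabled; the retention period is a hypothetical value that legal and compliance requirements would determine.

```python
from typing import Any


def build_object_lock_config(retention_years: int) -> dict[str, Any]:
    """Default Compliance-mode retention applied to every new object version in the vault."""
    if retention_years < 1:
        raise ValueError("retention_years must be at least 1")
    return {
        "ObjectLockEnabled": "Enabled",
        # COMPLIANCE mode: retention cannot be shortened or removed by any user.
        "Rule": {"DefaultRetention": {"Mode": "COMPLIANCE", "Years": retention_years}},
    }
```

A caller would pass the result as `ObjectLockConfiguration=` to `boto3.client("s3").put_object_lock_configuration(Bucket=...)` in the vault account. In Compliance mode, no user in that account, including the root user, can delete a locked object version or shorten its retention until the period expires.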

Technical architecture and AWS services

AWS services used in the solution:

- AWS DataSync: The secure data mover handling high-speed migration with end-to-end encryption and bit-perfect integrity verification

- Amazon S3 and Glacier Deep Archive: The "Touch-and-Go" storage foundation; S3 Standard handles hot processing while Deep Archive serves as the lowest-cost "Master Vault"

- AWS Elemental MediaConvert: Automatically creates lightweight H.264 proxy files for instant preview, decoupling discovery from the vaulted master file

- AWS Step Functions: The serverless orchestrator managing the ingest lifecycle, compliance logic and asynchronous restore workflows

- Amazon Bedrock Data Automation: The GenAI engine that "watches" video proxies to extract multimodal insights like celebrity, object and scene detection

- Amazon OpenSearch Service: Stores vector embeddings to enable semantic search, allowing editors to find content by "vibe" or concept rather than just filename

- Amazon AppFlow: Securely ingests external business signals (e.g., marketing calendars) to drive the predictive restoration model

- Amazon Athena and AWS Glue: The governance layer that structures metadata and cleans access logs before they reach the ML pipeline

- Amazon SageMaker: Forecasts retrieval demand based on business signals to "pre-warm" assets from the vault ahead of campaigns

- Amazon QuickSight: Provides dashboards analyzing storage unit economics, ingest trends and retrieval SLAs

Value for users: Key benefits unlocked

- Up to 90% faster time-to-market: Editors and producers can search, preview and initiate restores in minutes via a self-service portal, eliminating the 24-48 hour wait times associated with physical tape requests

- Predictable OpEx model: By eliminating the need for million-dollar hardware refreshes and maintenance contracts, the solution smooths cash flow. Organizations pay only for the storage they use, leveraging Deep Archive to cut run-rate costs by 50-70%

- Instant previews: Lightweight H.264 proxies stay in hot storage, allowing editors to preview any asset across a 20 PB library instantly without triggering a vault restore

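- Risk mitigation: The "blind spots" of tape are removed. With CloudTrail data events enabled on the archive buckets, AWS CloudTrail logs every object-level access request, creating an immutable audit trail. Combined with S3's design for 99.999999999% (11 nines) durability, the risk of data loss is virtually eliminated, and any attempted theft leaves a traceable record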

- Future-proofing with AI: The archive is no longer a static graveyard. It is a data lake ready for the next wave of innovation, such as using Generative AI to automatically generate trailers, summarize daily rushes, or identify monetization opportunities in back-catalog IP

- The "forever revenue" engine: Transform your archive from a cost center into a licensing storefront. By having assets indexed and cloud-ready, sales teams can instantly package "Flashback Collections" for licensing to social media platforms or FAST channels

- Sustainability as a Service (Green archiving): Align with corporate Net-Zero goals. Moving from on-premises, climate-controlled tape vaults (which consume constant power for cooling and humidity control) to AWS's hyperscale infrastructure efficiency significantly lowers the carbon footprint per terabyte. We optimize storage regions based on carbon intensity, helping you report measurable Scope 3 emission reductions in your annual ESG report

- "Zero-trust" media security: In an era of high-profile media leaks, our solution applies Zero Trust principles to content. Every single view, download, or preview is authenticated via IAM and logged in CloudTrail. Unlike a physical tape sitting on a desk, a digital asset in this system cannot be moved without leaving a forensic digital fingerprint
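To put the "11 nines" durability figure in perspective, the expected number of objects lost per year is the object count times the annual per-object loss probability. Using AWS's own illustrative case of 10 million stored objects:

```latex
\begin{aligned}
P_{\text{loss}} &= 1 - 0.99999999999 = 10^{-11} \quad \text{per object per year} \\
\mathbb{E}[\text{objects lost per year}] &= N \cdot P_{\text{loss}} = 10^{7} \cdot 10^{-11} = 10^{-4} \\
\text{mean time to lose one object} &\approx \frac{1}{10^{-4}} = 10^{4} \ \text{years}
\end{aligned}
```

Durability is a design target for the storage layer against hardware and media failure; protection against theft or deletion by authorized users comes from IAM, CloudTrail and Object Lock.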

Call to action: Stop storing, start monetizing!

Assess in weeks:

Our "Archive Discovery" assessment scans your current metadata and storage footprint in 2 weeks to build a TCO and ROI model.

Zero-downtime migration:

Our HCLTech "Tape-to-Cloud" factory methodology ensures your production team can keep working while we move the data in the background.

"From dark data to spotlight: Audit, air-lift and activate."

- Audit (assess): Get a TCO and ROI model in 2 weeks with our non-intrusive metadata scanner

- Air-lift (migrate): Execute a Zero-Downtime migration using our "Smart-Queue" ingestion factory

- Activate (monetize): Turn on the GenAI Curator and start licensing your history

Ready to monetize your history?

Studios and broadcasters, contact us now to schedule a "Media-IQ Demo" and see your archive come to life.