Artificial neural networks (ANNs) are an active research topic, in part because there is no guarantee that a given network model will achieve good accuracy on a particular problem. Rather than building models by repeated trial and error, we need a systematic way to choose an appropriate architecture. A key advantage of neural networks is that they can recognize and respond to patterns that are similar, but not identical, to the data they were trained on.

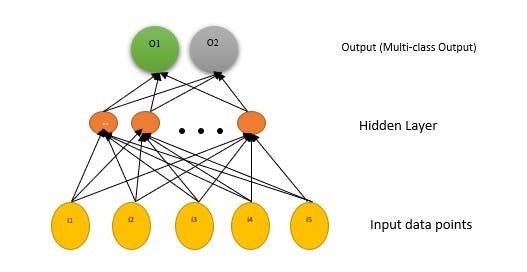

Neural networks are machine learning models that pass one or more inputs through a series of hidden layers to produce one or more outputs. The neurons (hidden nodes) in adjacent layers are connected, so each neuron influences the ones downstream and captures a different aspect of the relationships in the input data. Each connection carries a weight that reflects the relative importance of that input to the neuron receiving it.
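To make the role of weights concrete, here is a minimal sketch of a single neuron. It assumes a sigmoid activation and uses made-up input and weight values; the function name `neuron` is only illustrative and does not come from any library.

```python
import math

def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, squashed by a sigmoid."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical values: three inputs, the second one weighted most heavily.
inputs = [0.5, 1.0, -1.0]
weights = [0.1, 0.8, 0.3]
output = neuron(inputs, weights, bias=0.0)
```

The larger a weight, the more its input moves the weighted sum and therefore the output, which is what "relative importance" means in practice.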

Signals can flow through a neural network in two ways:

- Feedforward Neural Network: The data or signal travels in only one direction, from the input toward the result or target variable.

- Feedback Neural Network: The data or signal travels in both directions through the hidden layers, so earlier outputs can feed back into later computations.
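The difference between the two can be sketched with a single unit. This is an illustration under simplifying assumptions: feedback is modeled as a recurrent connection that feeds the previous output back in, and the weights `w_in` and `w_rec` and the input sequence are hypothetical values.

```python
import math

def feedforward_step(x, w_in):
    """The output depends only on the current input."""
    return math.tanh(w_in * x)

def feedback_step(x, h_prev, w_in, w_rec):
    """The output also depends on the previous output, which is fed back in."""
    return math.tanh(w_in * x + w_rec * h_prev)

sequence = [1.0, 0.0, 0.0]   # hypothetical input signal: one pulse, then silence
w_in, w_rec = 0.5, 0.9       # hypothetical weights

# Feedforward: each input is processed independently of the others.
ff_outputs = [feedforward_step(x, w_in) for x in sequence]

# Feedback: the previous output h is carried into the next step.
h = 0.0
fb_outputs = []
for x in sequence:
    h = feedback_step(x, h, w_in, w_rec)
    fb_outputs.append(h)
```

After the initial pulse, the feedforward outputs drop straight to zero, while the feedback outputs stay positive because the unit still "remembers" the earlier input.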

Deep learning mainly builds on the following core categories of neural network:

- Recurrent Neural Network

- Convolutional Neural Network

- Multi-Layer Perceptron

- Radial Basis Network

- Generative Adversarial Network

Designing a neural network architecture involves the following decisions:

- Number of hidden layers (depth)

- Number of units per hidden layer (width)

- Type of activation function (nonlinearity)

- Form of the objective function (learning rule)

- Learnable parameters (weights, biases)
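The sketch below shows how these decisions turn into a concrete model. It is a plain-Python illustration rather than any library's API: the helper names (`init_params`, `count_params`, `forward`, `mse`), the mean squared error objective, and all numeric values are assumptions chosen for the example.

```python
import math
import random

# Nonlinearities to choose from (the "type of activation function").
ACTIVATIONS = {"relu": lambda z: max(0.0, z), "tanh": math.tanh}

def init_params(n_inputs, n_outputs, depth, width, seed=0):
    """Create the learnable parameters: one (weights, biases) pair per layer."""
    rng = random.Random(seed)
    sizes = [n_inputs] + [width] * depth + [n_outputs]
    params = []
    for n_in, n_out in zip(sizes, sizes[1:]):
        weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
        biases = [0.0] * n_out
        params.append((weights, biases))
    return params

def count_params(params):
    """Total number of learnable weights and biases."""
    return sum(len(w) * len(w[0]) + len(b) for w, b in params)

def forward(params, x, activation="relu"):
    """Run the input through every layer; the output layer is left linear."""
    act = ACTIVATIONS[activation]
    for i, (weights, biases) in enumerate(params):
        z = [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, biases)]
        x = z if i == len(params) - 1 else [act(v) for v in z]
    return x

def mse(prediction, target):
    """Objective function (assumed here): mean squared error."""
    return sum((p - t) ** 2 for p, t in zip(prediction, target)) / len(prediction)

# Hypothetical design: 4 inputs, 2 hidden layers of 8 units, 1 output, ReLU.
params = init_params(n_inputs=4, n_outputs=1, depth=2, width=8)
prediction = forward(params, [0.2, -0.1, 0.4, 0.0], activation="relu")
loss = mse(prediction, [1.0])
n_learnable = count_params(params)
```

With this configuration the network has 121 learnable parameters (40 + 72 + 9 across the three layers). Changing the depth or width changes that count directly, which is one reason these choices matter for both accuracy and training cost.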

As a simple analogy, consider a rocket that has to land on Mars. It needs several components to work together: a central processor, engine components, fuel, avionics, a payload, and sensors for altitude, velocity, pressure, thrust, weigh cells, and so on. We can label these components and their associated features as “neurons”. The values they carry, such as velocity, pressure, altitude, trajectory, weigh-cell readings, and fuel efficiency, play the role of “weights”. How the weights and neurons work together to bring the rocket to Mars can be thought of as the activation function, and how well the rocket lands on Mars is the final output.

Typical neural network use cases include image classification, medical monitoring, and intelligently guided vehicle navigation from a source to a destination.

So why do we need a well-chosen architecture for a neural network? Because a well-chosen architecture gives the model the best chance of making accurate predictions on data points in real-world scenarios.

Please check Part-2 for information on neural architecture search.
