- Orchestrating multiple AI agents across clouds is key to moving beyond single “bots” and automating end-to-end business workflows

- The orchestration layer plans tasks, routes work to the right agents, enforces policy/guardrails (security, cost, compliance), shares context and escalates to humans when needed

- Open standards, like Google’s Agent-to-Agent protocol and the Model Context Protocol, are emerging to enable secure interoperability between vendors and clouds

- Benefits include interoperability, richer automation (supervisor/conductor agents) and safer scalability through observability and cost governors

- Top challenges: avoiding vendor lock-in, an expanding identity and security attack surface, data governance/sovereignty, limited observability and cost/performance control, each requiring explicit design patterns

- Recommended practices: standardize interfaces early, design for hybrid autonomy, build in security by design using the OWASP LLM Top 10, instrument everything and continuously red-team/chaos-test

- Real-world uses span customer-service “swarms,” financial onboarding, FinOps and supply-chain/field ops, with orchestration moving from pilots to production

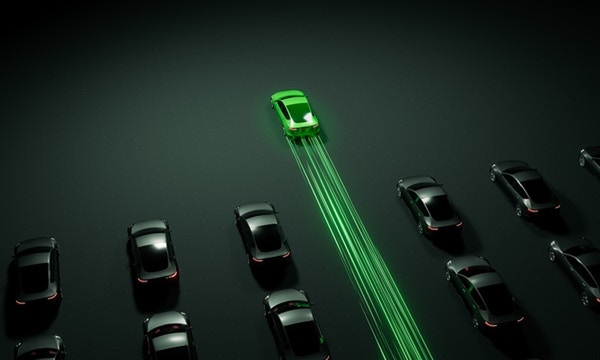

- Multicloud offers choice, while orchestration turns that diversity into governed, repeatable outcomes at enterprise scale

After experimenting with isolated bots, businesses are now turning to AI agents to keep innovating and transforming. With multiple intelligent agents in play, they want to orchestrate those agents, or enable them to interoperate, so they can automate larger processes.

What is agent orchestration?

“In Agentic AI, the orchestration of agents is the discipline of coordinating multiple autonomous agents, tools and services, often across different clouds, so they can collaborate to achieve a specific business outcome. Agent orchestration also provides guardrails for security, cost and compliance” - Piyush Saxena, SVP and Global Head, Google Business Unit, HCLTech

Most people have used a ‘procurement assistant’ that can answer a question about a product they plan to purchase. In an orchestrated, multi-agent system, however, there are multiple agents, each with a specific role, and they interoperate, or work together, with one another. In this example, the procurement agent communicates with, and may share tasks with, a contract-analysis agent, a fraud-detection agent and a workflow engine. Orchestration ensures agents share context, hand off work and escalate to humans when needed.

The orchestration layer typically includes planning and routing logic, a policy engine for access and safety, a message bus or protocol so agents can interact with one another, observability for tracing what happened and human-in-the-loop checkpoints for high-stakes steps. In multicloud environments, the agents and the data they use may sit on multiple clouds, so the orchestration layer must be able to normalize identity, broker data access and manage latency across cloud providers.

This is where emerging standards will have a big impact. Google’s Agent-to-Agent (A2A) protocol aims to let agents from different vendors interoperate securely, a critical step beyond today’s proprietary architectures. Complementary standards such as the Model Context Protocol (MCP) expose tools and data sources to agents in a consistent way. The goal is an interoperable mesh for agent collaboration.
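To make the two roles concrete, here is a minimal Python sketch of the kind of metadata each standard exchanges: an agent advertising its skills through an A2A-style agent card, and a tool described MCP-style by a name, a description and a JSON Schema for its inputs. The field names loosely follow the published A2A and MCP specifications but are abbreviated here; the agent, tool, URL and the helpers `agents_with_tag` and `validate_arguments` are hypothetical.

```python
# Sketch only: descriptor shapes loosely modeled on A2A agent cards and MCP
# tool listings. All names, URLs and helpers are hypothetical.

# A2A-style agent card: metadata an agent publishes so other agents can find it.
CONTRACT_AGENT_CARD = {
    "name": "contract-analysis-agent",
    "description": "Extracts obligations and risks from supplier contracts",
    "url": "https://agents.example.com/contracts",  # placeholder endpoint
    "version": "1.0.0",
    "skills": [
        {"id": "extract-clauses", "name": "Extract clauses", "tags": ["contracts", "legal"]},
    ],
}

# MCP-style tool descriptor: name, description and a JSON Schema for the inputs.
SANCTIONS_TOOL = {
    "name": "check_sanctions",
    "description": "Screens a party name against sanctions lists",
    "inputSchema": {
        "type": "object",
        "properties": {
            "party_name": {"type": "string"},
            "country": {"type": "string"},
        },
        "required": ["party_name"],
    },
}

# Map JSON Schema primitive type names to Python types for a shallow check.
JSON_TYPES = {"string": str, "number": (int, float), "integer": int,
              "boolean": bool, "object": dict, "array": list}


def agents_with_tag(cards, tag):
    """Return the names of agents that advertise a skill carrying `tag`."""
    return [card["name"] for card in cards
            if any(tag in skill.get("tags", []) for skill in card.get("skills", []))]


def validate_arguments(tool, args):
    """Shallow check of tool-call arguments against the tool's inputSchema.

    Checks required keys and top-level types only; a production orchestrator
    would use a full JSON Schema validator instead.
    """
    schema = tool["inputSchema"]
    errors = [f"missing required field: {key}"
              for key in schema.get("required", []) if key not in args]
    for key, value in args.items():
        spec = schema.get("properties", {}).get(key)
        # Keys without a declared type are skipped (JSON Schema allows extra
        # properties unless additionalProperties is false).
        if spec is not None and not isinstance(value, JSON_TYPES[spec["type"]]):
            errors.append(f"wrong type for {key}: expected {spec['type']}")
    return errors


print(agents_with_tag([CONTRACT_AGENT_CARD], "legal"))  # ['contract-analysis-agent']
print(validate_arguments(SANCTIONS_TOOL, {"country": 42}))
# ['missing required field: party_name', 'wrong type for country: expected string']
```

Because every agent and tool describes itself in the same shape, the orchestrator can discover capabilities and reject malformed calls without per-vendor glue code.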

Agentic AI orchestration in a multicloud ecosystem

“Agent orchestration is important because it unlocks end-to-end automation across heterogeneous clouds, which turns scattered single purpose bots or agents into a coordinated system that is interoperable, compliant and scalable” - Piyush Saxena, SVP and Global Head, Google Business Unit, HCLTech

Most enterprises now run an intentional multicloud strategy, using different providers for data, analytics or SaaS services. That diversity enables innovation but also creates friction, including identity sprawl, data gravity, inconsistent tooling and duplicated automations.

HCLTech’s research report, Cloud Evolution: Mandate to Modernize, finds that leaders should prioritize open solutions to tame this complexity while keeping cloud as the core platform for AI innovation. Orchestration addresses this by standardizing how agents communicate, authenticate, report telemetry and escalate across environments.

Orchestration frameworks: Key benefits

- Improved interoperability: Orchestration lets agents built on different stacks interoperate through standards such as A2A and MCP, combined with cloud-native identity. For example, a customer-care “swarm” queries ServiceNow and Salesforce, then uses a Google agent to summarize knowledge articles for a personalized response

- Enhanced automation: A conductor agent can plan multi-step tasks, parallelize subtasks, manage tool calls and ask for human approval when thresholds are hit, which goes far beyond a linear script. For example, Amazon Bedrock multi-agent collaboration dispatches specialized agents (research, finance, legal), coordinated by a supervisor agent, to answer complex business questions

- Greater scalability: Observability, policy controls and cost governors in the orchestration layer let you scale multi-agent workloads safely. For example, Vertex AI Agent Builder centralizes runtime controls (threads, tool calls, safety) and integrates with enterprise identity and networking to run agents at production scale.
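The supervisor/conductor pattern can be sketched with plain Python `asyncio`: independent specialist agents run in parallel, and a threshold triggers a human approval step before the result is acted on. This is a hypothetical illustration of the pattern, not the Amazon Bedrock API; the agent functions, the `approve` callback and the threshold are assumptions, and each agent returns canned output in place of a model call.

```python
import asyncio

# Hypothetical policy: recommendations above this amount need human sign-off.
APPROVAL_THRESHOLD_USD = 10_000


async def research_agent(question):
    await asyncio.sleep(0.01)  # stands in for a model or tool call
    return f"market research on {question!r}"


async def finance_agent(question):
    await asyncio.sleep(0.01)
    return {"recommended_spend_usd": 25_000}


async def legal_agent(question):
    await asyncio.sleep(0.01)
    return "no blocking clauses found"


async def supervisor(question, approve):
    """Fan out independent subtasks, then gate the result on human approval."""
    # The three specialists run concurrently instead of in a fixed sequence.
    research, finance, legal = await asyncio.gather(
        research_agent(question), finance_agent(question), legal_agent(question)
    )
    spend = finance["recommended_spend_usd"]
    needs_human = spend > APPROVAL_THRESHOLD_USD
    if needs_human and not approve(spend):
        return {"status": "rejected_by_human", "spend_usd": spend}
    return {"status": "completed", "spend_usd": spend,
            "human_approved": needs_human, "evidence": [research, legal]}


# `approve` is a callback standing in for a real approval UI or ticketing step.
result = asyncio.run(supervisor("enter a new region?", approve=lambda amount: False))
print(result)  # {'status': 'rejected_by_human', 'spend_usd': 25000}
```

Keeping the approval gate in the supervisor, rather than inside each specialist, means one place decides when a human must sign off.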

The bottom line is that multicloud gives enterprises choices, while orchestration ensures a repeatable, governed outcome.

How does agent orchestration work?

The role of the orchestration layer

“The orchestration layer plans the work, routes tasks to the right agent, governs what each agent can see and do and continuously optimizes the workflow based on feedback and policies” - Piyush Saxena, SVP and Global Head, Google Business Unit, HCLTech

At runtime, the conductor ingests a goal, such as ‘resolve this claim’, then decomposes it into steps and allocates the steps to agents based on capability and load. It mediates communication, like messages and tool calls, via a protocol and persists shared context. It then enforces policy, such as identity, permissions and sensitive-data handling, and observes the whole graph for traceability and optimization.
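A minimal Python sketch of the decompose-and-allocate step just described, assuming a fixed lookup table stands in for the planner and that load is measured as a count of active tasks. The agent names, capabilities and the `decompose`/`allocate` helpers are hypothetical; a real conductor would also mediate messages, enforce policy and record traces.

```python
from dataclasses import dataclass


@dataclass
class Agent:
    name: str
    capabilities: set
    active_tasks: int = 0  # current load, used for routing


@dataclass
class Step:
    description: str
    needs: str  # capability the step requires


# Hypothetical fixed plan; in practice an LLM planner or workflow template
# would produce the decomposition.
PLANS = {
    "resolve this claim": [
        Step("extract claim details", "document-extraction"),
        Step("screen for fraud", "fraud-detection"),
        Step("check policy coverage", "policy-lookup"),
        Step("draft settlement letter", "document-extraction"),
    ],
}


def decompose(goal):
    """Break a goal into steps (here, by looking up a predefined plan)."""
    if goal not in PLANS:
        raise ValueError(f"no plan for goal: {goal!r}")
    return PLANS[goal]


def allocate(steps, agents):
    """Assign each step to the least-loaded agent that has the capability.

    Steps no agent can handle are routed to a human instead of failing.
    """
    assignments = []
    for step in steps:
        candidates = [a for a in agents if step.needs in a.capabilities]
        if not candidates:
            assignments.append((step.description, "HUMAN"))
            continue
        chosen = min(candidates, key=lambda a: a.active_tasks)
        chosen.active_tasks += 1  # update load so later steps spread out
        assignments.append((step.description, chosen.name))
    return assignments


agents = [
    Agent("extractor-gcp", {"document-extraction"}, active_tasks=2),
    Agent("extractor-aws", {"document-extraction"}),
    Agent("fraud-azure", {"fraud-detection"}),
]
assignments = allocate(decompose("resolve this claim"), agents)
for description, owner in assignments:
    print(f"{description} -> {owner}")
# extract claim details -> extractor-aws
# screen for fraud -> fraud-azure
# check policy coverage -> HUMAN
# draft settlement letter -> extractor-aws
```

Here the agents happen to live on different clouds, but the conductor only reasons about capabilities and load, which is what keeps routing provider-neutral.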

In practice, orchestration handles:

- Task allocation and planning: Breaks goals into sub-tasks, chooses single agent vs. parallel “swarm” and reprioritizes on failure

- Communication and memory: Normalizes agent messages, passes summaries rather than raw data and caches results for reuse

- Guardrails and approvals: Applies role-based access, data redaction and rate limits, while routing high-risk decisions to humans

- Optimization: Tunes prompts/tools, parallelizes safe steps, selects models per task and uses outcome metrics to improve

- Recovery: Detects drift, retries, rolls back side effects and escalates with full trace context
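The retry, rollback and escalation behavior is often implemented with the saga pattern: each step that has side effects is paired with a compensating action that undoes it. A minimal Python sketch under that assumption follows; `run_saga`, `StepFailed` and the onboarding steps are hypothetical, drift detection is not covered, and a real system would persist the trace and route the escalation to a human queue rather than simply return it.

```python
import uuid


class StepFailed(Exception):
    """Raised by an action when a step fails in a retryable way."""


def run_saga(steps, max_retries=2):
    """Run (name, action, compensate) steps in order with retries.

    If a step still fails after `max_retries` retries, the compensations of
    the completed steps run in reverse order and the run is escalated with
    its full trace.
    """
    trace_id = uuid.uuid4().hex
    trace = []
    completed = []
    for name, action, compensate in steps:
        for attempt in range(1, max_retries + 2):  # first try plus retries
            try:
                action()
            except StepFailed as exc:
                trace.append((name, attempt, f"failed: {exc}"))
            else:
                trace.append((name, attempt, "ok"))
                completed.append((name, compensate))
                break
        else:
            # Retries exhausted: undo side effects, newest first, then escalate.
            for done_name, undo in reversed(completed):
                undo()
                trace.append((done_name, None, "compensated"))
            return {"status": "escalated", "trace_id": trace_id, "trace": trace}
    return {"status": "done", "trace_id": trace_id, "trace": trace}


# Hypothetical onboarding steps: funds are reserved, then account opening fails.
ledger = []


def reserve_funds():
    ledger.append("reserved")


def release_funds():
    ledger.append("released")


def open_account():
    raise StepFailed("core banking timeout")


def close_account():
    ledger.append("closed")


result = run_saga([
    ("reserve_funds", reserve_funds, release_funds),
    ("open_account", open_account, close_account),
])
print(result["status"], ledger)  # escalated ['reserved', 'released']
```

The escalation carries the trace id and every attempt, so the human who picks it up sees exactly what ran, what failed and what was rolled back.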

Challenges and best practices for AI agent orchestration

Common challenges in multi-agent orchestration

The top challenges include interoperability gaps, security and trust, data governance, observability and cost control, especially across clouds.

- Interoperability and lock-in: Agents built for one stack can’t easily collaborate with agents built on another.

Solution: Adopt the standards mentioned above (A2A for agent-to-agent communication, MCP for tools and data) and keep orchestration logic decoupled from any single provider.

- Security, identity and trust: Multi-agent systems expand the attack surface.

Solution: Implement least-privilege identities per agent, scoped tokens, runtime output validation and red-teaming. OWASP’s LLM Top 10 is a useful baseline. Recent analyses of A2A propose improvements such as short-lived tokens and granular scopes.

- Data governance and sovereignty: Agents moving data across clouds risk policy violations.

Solution: Enforce data-handling policies in the orchestrator, use policy-as-code and prefer local processing where laws require it.

- Observability and audit: Debugging swarms without traces is risky.

Solution: Record prompt, response and tool-call lineage for each step, and require deterministic fallbacks for critical paths.

- Cost sprawl and performance: Parallelism is powerful but expensive.

Solution: Cap per-task budgets, cache intermediate results, route models by task complexity and test “narrow agents” on simpler steps first.
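Several of these solutions (least-privilege scopes, data-residency rules and per-task budget caps) can be expressed as policy-as-code that the orchestrator evaluates before every agent action. The Python sketch below assumes a simple in-memory policy table and deny-by-default evaluation; the agent, scopes, regions and amounts are hypothetical, and production systems often delegate this to a dedicated policy engine such as Open Policy Agent.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentPolicy:
    scopes: frozenset           # least privilege: actions this agent may take
    allowed_regions: frozenset  # where this agent's data may be processed
    budget_usd: float           # per-task spending cap


@dataclass(frozen=True)
class ActionRequest:
    agent: str
    action: str
    data_region: str
    est_cost_usd: float
    spent_usd: float            # already spent on this task
    high_risk: bool = False


# Hypothetical policy, versioned and reviewed like any other code.
POLICIES = {
    "kyc-agent": AgentPolicy(
        scopes=frozenset({"read:documents", "call:sanctions_check"}),
        allowed_regions=frozenset({"eu-west"}),
        budget_usd=2.0,
    ),
}


def evaluate(req):
    """Return 'allow', 'needs_approval' or a 'deny: ...' reason (deny by default)."""
    policy = POLICIES.get(req.agent)
    if policy is None:
        return "deny: unknown agent"
    if req.action not in policy.scopes:
        return f"deny: {req.action} is outside the agent's scope"
    if req.data_region not in policy.allowed_regions:
        return f"deny: data in {req.data_region} may not be processed by this agent"
    if req.spent_usd + req.est_cost_usd > policy.budget_usd:
        return "deny: per-task budget exceeded"
    if req.high_risk:
        return "needs_approval"  # route to a human checkpoint
    return "allow"


print(evaluate(ActionRequest("kyc-agent", "call:sanctions_check", "eu-west", 0.5, 1.0)))
# allow
print(evaluate(ActionRequest("kyc-agent", "write:core_banking", "eu-west", 0.1, 0.0)))
# deny: write:core_banking is outside the agent's scope
```

Because the rules live in one reviewed table rather than inside each agent's prompt, a compromised or misbehaving agent cannot talk its way past them.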

Best practices for effective orchestration

- Design for hybrid autonomy: Combine LLM-planned steps with code-orchestrated sagas to balance flexibility and control

- Standardize interfaces early: Use A2A/MCP so teams can add agents and tools without refactoring pipelines

- Secure by design: Apply OWASP LLM Top 10 controls, secrets isolation per agent, runtime guardrails and approval gates for sensitive actions

- Instrument everything: Trace every agent handoff and tool call, log costs and capture evaluation metrics, such as accuracy, cycle time and deflection

- Pilot with a cross-functional squad: Blend platform engineering, security, domain SMEs and change management so orchestration fits the operating model

- Continuously test and red-team: Simulate attacks and chaos-test agent graphs before scaling
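Tracing every handoff and tool call, with its cost, can be sketched as nested spans that share one trace id. The hand-rolled Python `Tracer` below is hypothetical and only illustrates the data worth capturing; in production you would more likely emit OpenTelemetry spans and attach cost and evaluation metrics as span attributes.

```python
import time
import uuid
from contextlib import contextmanager


class Tracer:
    """Records one span per agent handoff or tool call under a shared trace id."""

    def __init__(self):
        self.trace_id = uuid.uuid4().hex
        self.spans = []    # finished spans, in completion order
        self._stack = []   # currently open spans, innermost last

    @contextmanager
    def span(self, name, kind):
        parent = self._stack[-1]["span_id"] if self._stack else None
        record = {"trace_id": self.trace_id, "span_id": uuid.uuid4().hex[:16],
                  "parent_id": parent, "name": name, "kind": kind,
                  "cost_usd": 0.0, "status": "ok"}
        self._stack.append(record)
        start = time.perf_counter()
        try:
            yield record  # the caller can attach cost or metrics to the span
        except Exception:
            record["status"] = "error"
            raise
        finally:
            record["duration_s"] = time.perf_counter() - start
            self._stack.pop()
            self.spans.append(record)

    def total_cost(self):
        return sum(span["cost_usd"] for span in self.spans)


tracer = Tracer()
with tracer.span("supervisor", "agent"):
    with tracer.span("knowledge-agent", "handoff") as s:
        s["cost_usd"] = 0.004   # hypothetical model cost
    with tracer.span("crm.lookup", "tool_call") as s:
        s["cost_usd"] = 0.001
print(len(tracer.spans), round(tracer.total_cost(), 4))  # 3 0.005
```

The parent links let you rebuild the agent graph for a single request, and summing span costs per trace gives the per-task spend that budget caps are enforced against.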

Real-world application of AI agent orchestration

Use cases across industries

- Customer service “swarms”: A supervisor agent triages intent, a knowledge agent retrieves answers, a policy agent checks entitlements and an actions agent submits cases, all while escalating exceptions to humans. Google has published guidance and GA support for multi-agent collaboration in such scenarios, showing how specialized agents coordinate to improve first-contact resolution and reduce handle time

- Financial services onboarding: Across different clouds, orchestrated agents gather KYC documents, verify identity via third-party tools, check sanctions lists and open accounts in core banking. The orchestrator guarantees auditability and data-residency controls across jurisdictions

- FinOps, field ops and supply chain: A FinOps solution uses a cost-analyzer agent to track spend, a resource-optimizer agent to recommend more efficient usage, budget-enforcer and forecasting agents to flag budget breaches and predict future costs, and a policy-compliance agent to ensure adherence to policies. In supply chain and field operations, agents monitor IoT signals, predict failures, order parts and schedule technicians while coordinating ERP, logistics and maintenance platforms

HCLTech and partners are already packaging industry- and workflow-specific agents that minimize manual work and improve decision quality across such use cases, evidence that orchestration is moving from pilots to production.

Future trends in AI agent orchestration

- Protocol convergence: Wider adoption of A2A and MCP enables a vendor-neutral mesh of agents and tools across clouds and endpoints (even Windows desktop components)

- Managed agent platforms: Expect deeper, enterprise-grade runtimes with identity, tool gateways and observability out of the box, such as Google's Vertex AI Agent Engine

- Deterministic and agentic hybrids: Orchestrators will mix LLM planning with deterministic workflows for reliability, especially in regulated processes

- Security-first orchestration: OWASP and cloud providers will strengthen guidance on excessive autonomy and sensitive-data exposure, and secure A2A implementations will mature

The symphony of AI agents

To compete in the Agentic AI era, enterprises must move from talented soloists to a well-conducted orchestra. AI agent orchestration provides a smart conductor to translate business goals into plans, cue the right specialists, enforce the score (policy) and keep time, cost and SLAs across multicloud stages. With open protocols like A2A and MCP, managed runtimes in every cloud and proven design patterns, the technology stack is ready. The differentiator is how you compose and perform.

Start with one high-value use case, such as customer service, claims or field ops, prove its value and then scale the solution. Open, consistent approaches cut friction and accelerate outcomes. Our guidance: define your orchestration blueprint, pilot with measurable KPIs and build a secure, observable mesh of agents that turns cloud choice into business advantage.

FAQs

What is AI agent orchestration?

AI agent orchestration is the coordinated management of multiple autonomous agents, tools and workflows, often across different clouds, to deliver an end-to-end outcome with shared context, policy and observability. It goes beyond single bots by planning, routing, governing and optimizing multi-step, multi-agent work.

How is AI agent orchestration different from RPA or a single chatbot?

RPA automates fixed tasks, and a lone chatbot answers questions in one domain. Orchestrated agents collaborate, reason, call tools, hand off work and involve humans when needed, combining autonomy with governance for complex, cross-system workflows.

Why is AI agent orchestration critical in multicloud?

Multicloud gives you best-of-breed services, but also identity, data and tooling fragmentation. Orchestration normalizes these differences, enabling interoperable agents that work across providers while enforcing security and compliance.

Which standards and protocols should enterprises care about when adopting AI agents at scale?

Two to watch: A2A for agent-to-agent collaboration across vendors and MCP for exposing tools and data to agents consistently. Together, they reduce custom glue code and prevent lock-in.

What are the primary security risks of adopting AI agents?

Multi-agent systems introduce risks like prompt injection, sensitive-data leakage and excessive autonomy. Mitigations include least-privilege identity per agent, scoped tokens, runtime output filtering and human approval gates.

Which platforms should I evaluate when adopting AI agents?

For Google-centric estates: Agentspace and Vertex AI Agent Engine with A2A. For Microsoft: Azure AI Foundry Agent Service and Copilot Studio. For AWS: Bedrock Agents, AgentCore and Step Functions for deterministic flows. Choose based on data gravity, governance needs and interoperability requirements.

How can enterprises get started on their AI agent journey and how can they measure success?

Pick one high-impact use case, define guardrails and KPIs, instrument every agent handoff for traceability and iterate. Expect quick wins from parallelization and tool reuse, then scale patterns organization-wide.
