EU AI Act: Staying compliant in an AI-driven world

Introduction

Artificial Intelligence (AI) has grown significantly, reshaping numerous industries and daily routines. It offers advantages such as greater efficiency, personalized experiences and medical breakthroughs, but it also raises concerns about job displacement, privacy and bias. Balancing AI's potential against these challenges is essential for a responsible and inclusive future, and it has brought AI safety and the need to regulate the AI sector to the forefront. As a first step in this direction, the European Parliament has approved its negotiating position on the draft regulation, the EU AI Act. By staying up to date on these developments, we can help organizations meet their compliance obligations for AI systems by:

- Providing regulatory guidance and support

- Developing policies and procedures aligned to the EU AI Act’s provisions

- Advising on data governance and incorporating AI-specific risks into the broader risk management framework

Why an AI Act?

While AI can automate many of the tasks we assign to it, human oversight helps mitigate risks, improve decision-making and balance AI's capabilities against ethical considerations. Pain points that human intervention can address include:

- Ethical considerations: Ensuring AI-driven actions align with societal values, ethical standards and legal regulations

- Bias and fairness: Detecting and rectifying bias to ensure fairness and prevent discriminatory outcomes

- Unforeseen situations: Handling scenarios the AI system has not encountered before, including identifying and preventing unintended consequences and side effects of unforeseen interactions

- Interpretability: Interpreting and explaining AI-generated outcomes to users and stakeholders

- Accountability: Humans remain accountable for the actions of AI systems, so oversight ensures that responsibility can be attributed in case of errors or adverse outcomes

- Legal and regulatory compliance: Ensuring compliance with all applicable legal and regulatory frameworks

The European Commission introduced its proposal for a framework to regulate AI systems in April 2021. The regulation aims to ensure that AI is used in a safe, transparent, traceable, non-discriminatory and environmentally friendly way.

Scope of the Act

The scope of the Act is broad, covering individuals and entities that offer products or services built on AI. It applies to systems that generate outputs such as content, predictions, recommendations or decisions that influence the environments they interact with. The Act will also scrutinize the use of AI in public-sector operations and law enforcement.

The objectives of the AI Act are to:

- Facilitate investment and innovation in AI

- Ensure products and solutions are safe and compliant with EU law

- Enhance governance and the effective enforcement of existing law on fundamental rights and safety requirements

- Prevent market fragmentation by creating a single market for lawful, safe and trustworthy AI applications

The EU AI Act applies to providers established in the EU, to providers in non-EU countries that place AI systems on the EU market and to users of AI systems within the EU. It governs how AI systems are created, distributed and used, with the aim of ensuring responsible and accountable AI practices across development, deployment and use.

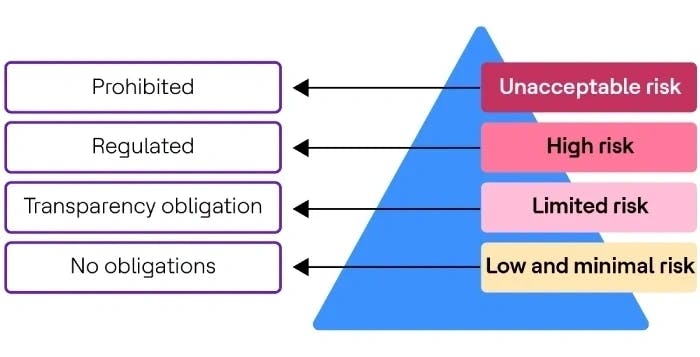

The Act requires a risk-based approach to developing and using AI systems, classifying them according to the risks they pose:

Any system that falls under the ‘Unacceptable risk’ category will be banned. Systems in this category include:

- Systems that use manipulative techniques to distort people's behavior in harmful ways

- Systems that exploit the vulnerabilities of specific groups, such as people with a mental or physical disability

- Systems that are used for social scoring purposes by public authorities

- 'Real-time' remote biometric identification systems in publicly accessible spaces for law enforcement purposes, except in a limited number of cases

Systems in the ‘High risk’ category are those that could put health, safety or fundamental rights at risk, such as:

- Systems that could determine access to education

- Critical infrastructure where the AI system could risk people’s lives and health

- Essential private and public services

- Law enforcement and justice

- Migration and border control

Providers of high-risk systems would need to register them in an EU-wide database and carry out conformity assessments to show that they comply with all requirements. Depending on the sector and the regulatory framework that already governs it, these assessments can be carried out by the provider itself or by an independent third-party body. High-risk systems also require a risk management system to identify, track and mitigate risks, as well as data governance controls, detailed technical documentation and automatic event logging capabilities.
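To make the automatic event logging requirement more concrete, here is a minimal sketch of how a high-risk system might record its events in a traceable way. The Act asks for the logging capability; it does not prescribe this format. The `EventLogger` class, its field names and the one-JSON-object-per-line layout are all hypothetical choices made for illustration.

```python
import io
import json
from datetime import datetime, timezone
from typing import Any, TextIO


class EventLogger:
    """Append-only, structured event log for an AI system (illustrative sketch).

    Each event is written as one JSON object per line with a UTC timestamp,
    so that records can later be traced and audited. Field names are hypothetical.
    """

    def __init__(self, sink: TextIO, system_id: str) -> None:
        self._sink = sink
        self._system_id = system_id

    def log(self, event_type: str, **details: Any) -> dict[str, Any]:
        record = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "system_id": self._system_id,
            "event_type": event_type,
            "details": details,
        }
        # Write immediately and flush, so events are not lost if the process stops.
        self._sink.write(json.dumps(record, sort_keys=True) + "\n")
        self._sink.flush()
        return record


if __name__ == "__main__":
    buffer = io.StringIO()  # in production this would be durable storage
    logger = EventLogger(buffer, system_id="loan-screening-v2")
    logger.log("inference", input_ref="application-123", output="refer_to_human")
    logger.log("human_override", input_ref="application-123", final_decision="approved")
    print(buffer.getvalue())
```

Logging the human override next to the model's own output is a deliberate choice here: it links the record-keeping requirement to the human oversight and accountability points raised earlier in the article.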

‘Limited risk’ systems include those that interact with humans (such as chatbots), emotion recognition systems and systems that generate or manipulate image, audio or video content. These systems carry transparency obligations: users must be made aware that they are interacting with an AI system, or that content has been artificially generated or manipulated. This is similar in spirit to GDPR, which already requires informing people about the personal data being collected and its purpose.

All other systems are categorized as low or minimal risk and have no additional legal obligations under the Act.
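The tiers described above can be read as a decision procedure that is checked from the most to the least severe category. The sketch below is a hypothetical internal triage aid for a first-pass self-assessment, not a legal test. The category labels paraphrase this article's lists rather than the Act's legal definitions, and exceptions such as the limited cases where real-time biometric identification is permitted are not modeled.

```python
from dataclasses import dataclass, field
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable risk"
    HIGH = "high risk"
    LIMITED = "limited risk"
    MINIMAL = "low or minimal risk"


# Hypothetical labels paraphrasing the categories described in the article.
# They are NOT the Act's legal definitions, and exceptions are not modeled.
PROHIBITED_PRACTICES = {
    "harmful_manipulation",
    "exploits_vulnerable_groups",
    "public_authority_social_scoring",
    "realtime_remote_biometric_id_for_law_enforcement",
}
HIGH_RISK_AREAS = {
    "education",
    "critical_infrastructure",
    "essential_services",
    "law_enforcement_and_justice",
    "migration_and_border_control",
}
TRANSPARENCY_TRIGGERS = {
    "interacts_with_humans",
    "emotion_recognition",
    "generates_or_manipulates_media",
}


@dataclass
class AISystemProfile:
    """Hypothetical self-assessment profile for one AI system."""
    name: str
    practices: set[str] = field(default_factory=set)
    application_areas: set[str] = field(default_factory=set)
    features: set[str] = field(default_factory=set)


def classify(profile: AISystemProfile) -> RiskTier:
    """Return the most severe tier whose criteria the profile matches."""
    # Check from most to least severe, so a single prohibited practice wins.
    if profile.practices & PROHIBITED_PRACTICES:
        return RiskTier.UNACCEPTABLE
    if profile.application_areas & HIGH_RISK_AREAS:
        return RiskTier.HIGH
    if profile.features & TRANSPARENCY_TRIGGERS:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


if __name__ == "__main__":
    chatbot = AISystemProfile("support-chatbot", features={"interacts_with_humans"})
    grader = AISystemProfile("exam-grader", application_areas={"education"})
    print(chatbot.name, "->", classify(chatbot).value)
    print(grader.name, "->", classify(grader).value)
```

The function returns only the highest matching tier, which keeps the triage simple. In practice, obligations can stack: a high-risk system that also interacts with people may carry the transparency obligations as well, so a fuller tool would collect every applicable obligation rather than a single label.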

To oversee the uniform implementation and enforcement of the Act, a European Artificial Intelligence Board will also be set up to issue recommendations and guidance on issues that require oversight and review. Infringements of the Act can incur heavy penalties for the organization responsible, depending on the severity of the non-compliance. Fines can reach €30 million or 6% of total worldwide annual turnover, whichever is higher.
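Because the maximum fine is the higher of a fixed amount and a share of turnover, the cap scales with company size. The sketch below works through that arithmetic using the three severity tiers in the Commission's April 2021 proposal: €30 million or 6% for prohibited practices and data governance breaches, €20 million or 4% for other requirements, and €10 million or 2% for supplying incorrect or misleading information to authorities. Later versions of the text changed these figures, so treat them as illustrative; the tier keys are hypothetical labels.

```python
from decimal import Decimal

# Fine ceilings per severity tier, as in the Commission's April 2021 proposal.
# Each tier is (fixed cap in EUR, share of total worldwide annual turnover).
# Later drafts changed these figures; the tier keys are hypothetical labels.
FINE_TIERS = {
    "prohibited_practice_or_data_governance": (Decimal("30000000"), Decimal("0.06")),
    "other_requirements": (Decimal("20000000"), Decimal("0.04")),
    "misleading_information": (Decimal("10000000"), Decimal("0.02")),
}


def max_fine_eur(turnover_eur: Decimal, tier: str) -> Decimal:
    """Return the fine ceiling for a company: the higher of the fixed cap and the turnover share."""
    if turnover_eur < 0:
        raise ValueError("turnover must be non-negative")
    fixed_cap, share = FINE_TIERS[tier]
    return max(fixed_cap, share * turnover_eur)


if __name__ == "__main__":
    for turnover in (Decimal("100000000"), Decimal("2000000000")):
        cap = max_fine_eur(turnover, "prohibited_practice_or_data_governance")
        print(f"turnover EUR {turnover:,.0f} -> maximum fine EUR {cap:,.0f}")
```

For a company with €100 million in turnover, 6% is only €6 million, so the €30 million fixed amount is the ceiling. For a company with €2 billion in turnover, 6% comes to €120 million, which exceeds the fixed amount and becomes the ceiling instead.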

Although this legal framework has yet to be formally approved and adopted, it will be a game-changer for the development and use of artificial intelligence. The Act aims to ensure that AI systems can be used to their full potential while staying within legal and ethical boundaries and without undermining fundamental rights and safety.